!pip install torchtext torch==2.3.0 datasets tokenizers torchmetrics tensorboard altair --upgrade

Let's build a translation model using the Transformer architecture¶

Import libraries¶

%matplotlib inline

import torch

import torch.nn as nn

import math

from torch.utils.data import Dataset, DataLoader, random_split

from torch.optim.lr_scheduler import LambdaLR

import warnings

from tqdm import tqdm

import os

from pathlib import Path

# Huggingface datasets and tokenizers

from datasets import load_dataset

from tokenizers import Tokenizer

from tokenizers.models import WordLevel

from tokenizers.trainers import WordLevelTrainer

from tokenizers.pre_tokenizers import Whitespace

import torchmetrics

from torch.utils.tensorboard import SummaryWriter

import numpy as np

import matplotlib.pyplot as plt

import sys

print('Using PyTorch version:', torch.__version__)

if torch.backends.mps.is_available():
    device = torch.device("mps")
else:
    device = torch.device("cpu")

import torchtext; torchtext.disable_torchtext_deprecation_warning()

import torchtext.datasets as datasets

Using PyTorch version: 2.3.0

Define the configuration¶

def get_config():
    return {
        "batch_size": 8,
        "num_epochs": 10,
        "lr": 10**-4,
        "seq_len": 350,
        "d_model": 512,
        "datasource": 'opus_books',
        "lang_src": "en",
        "lang_tgt": "it",
        "model_folder": "weights",
        "model_basename": "tmodel_",
        "preload": "latest",
        "tokenizer_file": "tokenizer_{0}.json",
        "experiment_name": "runs/tmodel"
    }

def get_weights_file_path(config, epoch: str):
    model_folder = f"{config['datasource']}_{config['model_folder']}"
    model_filename = f"{config['model_basename']}{epoch}.pt"
    return str(Path('.') / model_folder / model_filename)

# Find the latest weights file in the weights folder
def latest_weights_file_path(config):
    model_folder = f"{config['datasource']}_{config['model_folder']}"
    model_filename = f"{config['model_basename']}*"
    weights_files = list(Path(model_folder).glob(model_filename))
    if len(weights_files) == 0:
        return None
    weights_files.sort()
    return str(weights_files[-1])
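
To see how the "latest" checkpoint is resolved, here is a small standalone sketch that mirrors the glob-and-sort logic in a temporary folder. The helper name latest_file and the file names are made up for the illustration. It also suggests why a zero-padded epoch number matters: the sort is lexicographic, so an unpadded tmodel_10.pt would sort before tmodel_9.pt.

```python
import tempfile
from pathlib import Path

def latest_file(folder: Path, basename: str):
    """Return the name of the lexicographically last file matching basename*, or None."""
    files = sorted(folder.glob(f"{basename}*"))
    return files[-1].name if files else None

with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp)
    empty_result = latest_file(folder, "tmodel_")  # nothing saved yet

    # Zero-padded epochs sort in numeric order
    for epoch in (2, 9, 10):
        (folder / f"tmodel_{epoch:02d}.pt").touch()
    padded_result = latest_file(folder, "tmodel_")

with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp)
    # Unpadded epochs do not: "tmodel_9.pt" sorts after "tmodel_10.pt"
    for epoch in (2, 9, 10):
        (folder / f"tmodel_{epoch}.pt").touch()
    unpadded_result = latest_file(folder, "tmodel_")

print(empty_result, padded_result, unpadded_result)
```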

Embeddings Layer¶

Each token is represented by a vector of size 512. d_model is the size of the embedding (512), and vocab_size is the size of the vocabulary, i.e. the number of distinct tokens.

class InputEmbeddings(nn.Module):

    def __init__(self, d_model: int, vocab_size: int) -> None:
        super().__init__()
        self.d_model = d_model
        self.vocab_size = vocab_size
        self.embedding = nn.Embedding(vocab_size, d_model)

    def forward(self, x):
        # (batch, seq_len) --> (batch, seq_len, d_model)
        # Multiply by sqrt(d_model) to scale the embeddings according to the paper
        return self.embedding(x) * math.sqrt(self.d_model)
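
As a rough illustration of what the embedding layer does, the sketch below replaces nn.Embedding with a plain Python lookup table. The tiny sizes and the random table are assumptions made for the example; in the model the table values are learned.

```python
import math
import random

d_model = 4      # tiny embedding size for the illustration (512 in the model)
vocab_size = 6

random.seed(0)
# One row per token id; in the real layer these values are learned
table = [[random.gauss(0.0, 1.0) for _ in range(d_model)] for _ in range(vocab_size)]

def embed(token_ids):
    """Look up each token id and scale its row by sqrt(d_model)."""
    scale = math.sqrt(d_model)
    return [[value * scale for value in table[t]] for t in token_ids]

sentence = [3, 1, 3]
vectors = embed(sentence)
print(len(vectors), len(vectors[0]))
```

The same token id always maps to the same vector, regardless of where it appears in the sentence, which is why a separate positional signal is needed.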

Positional encodings¶

Another layer that produces vectors of size 512. seq_len is the maximum context size, and there is one positional encoding for each position in the context. Positional encodings are not learned parameters of the model, hence the requires_grad_(False) below.

class PositionalEncoding(nn.Module):

    def __init__(self, d_model: int, seq_len: int, dropout: float) -> None:
        super().__init__()
        self.d_model = d_model
        self.seq_len = seq_len
        self.dropout = nn.Dropout(dropout)
        # Create a matrix of shape (seq_len, d_model)
        pe = torch.zeros(seq_len, d_model)
        # Create a column vector of positions
        position = torch.arange(0, seq_len, dtype=torch.float).unsqueeze(1) # (seq_len, 1)
        # Create a vector holding 1 / (10000 ** (2i / d_model))
        div_term = torch.exp(torch.arange(0, d_model, 2).float() * (-math.log(10000.0) / d_model)) # (d_model / 2)
        # Apply sine to even indices
        pe[:, 0::2] = torch.sin(position * div_term) # sin(position / (10000 ** (2i / d_model)))
        # Apply cosine to odd indices
        pe[:, 1::2] = torch.cos(position * div_term) # cos(position / (10000 ** (2i / d_model)))
        # Add a batch dimension to the positional encoding
        pe = pe.unsqueeze(0) # (1, seq_len, d_model)
        # Register the positional encoding as a buffer
        self.register_buffer('pe', pe)

    def forward(self, x):
        x = x + (self.pe[:, :x.shape[1], :]).requires_grad_(False) # (batch, seq_len, d_model)
        return self.dropout(x)
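
To make the sinusoidal formula concrete, here is a minimal sketch that computes the same table with plain Python lists instead of tensors. The function name and the small sizes are chosen for the illustration only.

```python
import math

def positional_encoding(seq_len: int, d_model: int):
    """Return a seq_len x d_model table of sinusoidal encodings."""
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        for i in range(0, d_model, 2):  # i runs over the even indices (i = 2k)
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)          # even index
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)  # odd index
    return pe

pe = positional_encoding(seq_len=4, d_model=6)
for row in pe:
    print([round(v, 3) for v in row])
```

Position 0 always encodes to alternating 0 and 1 (sin(0) and cos(0)); later positions rotate each sine/cosine pair at a frequency that decreases with the index.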

Layer normalization¶

Suppose you have a batch of 3 items, each with some features; for example, a batch of sentences where each sentence has multiple words/tokens and each item (word/token) has an embedding in the form of a list of numbers.

The layer norm calculates a mean and a variance for each item, independent of the other items. Then, it normalizes each item by the mean and variance of that item.

It also introduces two learnable parameters, a multiplicative gamma (called alpha in the code below) and an additive beta (called bias in the code below). They let the model amplify or reduce the normalized values, since forcing every item to zero mean and unit variance could be too restrictive.

class LayerNormalization(nn.Module):

    def __init__(self, features: int, eps:float=10**-6) -> None:
        super().__init__()
        self.eps = eps
        self.alpha = nn.Parameter(torch.ones(features)) # alpha is a learnable parameter
        self.bias = nn.Parameter(torch.zeros(features)) # bias is a learnable parameter

    def forward(self, x):
        # x: (batch, seq_len, hidden_size)
        # Keep the dimension for broadcasting
        mean = x.mean(dim = -1, keepdim = True) # (batch, seq_len, 1)
        # Keep the dimension for broadcasting
        std = x.std(dim = -1, keepdim = True) # (batch, seq_len, 1)
        # eps is to prevent dividing by zero or when std is very small
        return self.alpha * (x - mean) / (std + self.eps) + self.bias
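
The per-item normalization can be checked by hand on a single embedding. The sketch below works on plain Python lists and uses scalar alpha and bias for brevity (in the module they are vectors); the sample values are arbitrary.

```python
import math

def layer_norm(x, alpha=1.0, bias=0.0, eps=1e-6):
    """Normalize one item (a list of features) by its own mean and std."""
    n = len(x)
    mean = sum(x) / n
    # Bessel-corrected (n - 1) standard deviation, the default of torch.Tensor.std
    std = math.sqrt(sum((v - mean) ** 2 for v in x) / (n - 1))
    return [alpha * (v - mean) / (std + eps) + bias for v in x]

token_a = [1.0, 2.0, 3.0, 4.0]
token_b = [10.0, 20.0, 30.0, 40.0]
norm_a = layer_norm(token_a)
norm_b = layer_norm(token_b)
print([round(v, 3) for v in norm_a])
print([round(v, 3) for v in norm_b])
```

Both items end up with (almost) the same normalized values even though their scales differ by a factor of ten, because each item is normalized independently of the others.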

Feed forward layer¶

It's a linear layer, followed by a ReLU activation function and dropout (to avoid overfitting), followed by another linear layer.

class FeedForwardBlock(nn.Module):

    def __init__(self, d_model: int, d_ff: int, dropout: float) -> None:
        super().__init__()
        self.linear_1 = nn.Linear(d_model, d_ff) # w1 and b1
        self.dropout = nn.Dropout(dropout)
        self.linear_2 = nn.Linear(d_ff, d_model) # w2 and b2

    def forward(self, x):
        # (batch, seq_len, d_model) --> (batch, seq_len, d_ff) --> (batch, seq_len, d_model)
        return self.linear_2(self.dropout(torch.relu(self.linear_1(x))))

Multi-Head Attention Layer¶

We have an input that is processed 3 times:

- By a query: Input(seq, d_model) @ W_q(d_model, d_model) = Q'(seq, d_model)

- By a key: Input(seq, d_model) @ W_k(d_model, d_model) = K'(seq, d_model)

- And by a value: Input(seq, d_model) @ W_v(d_model, d_model) = V'(seq, d_model)

Q', K' and V' are then split into h chunks along the embedding dimension. This way, each head has access to the full sentence, but a different subset of the embedding.

Then the attention formula is applied to each head, resulting in $head_1 ... head_h$. This is the formula: $$\mathrm{Attention}(Q,K,V) = \mathrm{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V$$

Then we concatenate the resulting $head_1 ... head_h$ and multiply by the output matrix $W^O$: $$\mathrm{MultiHead}(Q,K,V)=\mathrm{Concat}(head_1, \ldots, head_h)W^O$$

- seq = sequence length (number of words in a sentence, the max context length)

- d_model = dimensionality of the embedding vector (number of features in a word)

- h = number of heads (number of times we want to split the embedding vector in chunks)
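
The splitting and the attention formula can be traced on a tiny example. The following sketch uses plain Python lists, skips the learned W_q, W_k, W_v and W^O projections, and sets Q = K = V for brevity; the helper names and input values are assumptions made for the illustration.

```python
import math

def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V for one head, on lists of row vectors."""
    d_k = len(Q[0])
    scores = [[sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_k)
               for k_row in K] for q_row in Q]
    weights = [softmax(row) for row in scores]
    out = [[sum(w * v_row[j] for w, v_row in zip(w_row, V))
            for j in range(len(V[0]))] for w_row in weights]
    return out, weights

def split_heads(X, h):
    """Split each row of X (seq x d_model) into h chunks of size d_model // h."""
    d_k = len(X[0]) // h
    return [[row[i * d_k:(i + 1) * d_k] for row in X] for i in range(h)]

# seq = 3 tokens, d_model = 4, h = 2 heads, so d_k = 2
X = [[1.0, 0.0, 0.5, 0.5],
     [0.0, 1.0, 0.5, -0.5],
     [1.0, 1.0, 0.0, 0.0]]

heads = split_heads(X, h=2)
outputs = [attention(head, head, head) for head in heads]
# Concatenate the per-head outputs back to (seq, d_model)
concat = [sum((out[t] for out, _ in outputs), []) for t in range(len(X))]
print(len(heads), len(heads[0]), len(heads[0][0]))
print(len(concat), len(concat[0]))
```

Each head sees all 3 tokens but only 2 of the 4 embedding features, and every row of the attention weights sums to 1.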

class MultiHeadAttentionBlock(nn.Module):

    def __init__(self, d_model: int, h: int, dropout: float) -> None:
        super().__init__()
        self.d_model = d_model # Embedding vector size
        self.h = h # Number of heads
        # Make sure d_model is divisible by h
        assert d_model % h == 0, "d_model is not divisible by h"

        self.d_k = d_model // h # Dimension of vector seen by each head
        self.w_q = nn.Linear(d_model, d_model, bias=False) # Wq
        self.w_k = nn.Linear(d_model, d_model, bias=False) # Wk
        self.w_v = nn.Linear(d_model, d_model, bias=False) # Wv
        self.w_o = nn.Linear(d_model, d_model, bias=False) # Wo
        self.dropout = nn.Dropout(dropout)

    @staticmethod
    def attention(query, key, value, mask, dropout: nn.Dropout):
        d_k = query.shape[-1]
        # Just apply the formula from the paper
        # (batch, h, seq_len, d_k) --> (batch, h, seq_len, seq_len)
        attention_scores = (query @ key.transpose(-2, -1)) / math.sqrt(d_k)
        if mask is not None:
            # Write a very low value (indicating -inf) to the positions where mask == 0
            attention_scores.masked_fill_(mask == 0, -1e9)
        attention_scores = attention_scores.softmax(dim=-1) # (batch, h, seq_len, seq_len) # Apply softmax
        if dropout is not None:
            attention_scores = dropout(attention_scores)
        # (batch, h, seq_len, seq_len) --> (batch, h, seq_len, d_k)
        # return attention scores which can be used for visualization
        return (attention_scores @ value), attention_scores

    def forward(self, q, k, v, mask):
        query = self.w_q(q) # (batch, seq_len, d_model) --> (batch, seq_len, d_model)
        key = self.w_k(k) # (batch, seq_len, d_model) --> (batch, seq_len, d_model)
        value = self.w_v(v) # (batch, seq_len, d_model) --> (batch, seq_len, d_model)

        # (batch, seq_len, d_model) --> (batch, seq_len, h, d_k) --> (batch, h, seq_len, d_k)
        query = query.view(query.shape[0], query.shape[1], self.h, self.d_k).transpose(1, 2)
        key = key.view(key.shape[0], key.shape[1], self.h, self.d_k).transpose(1, 2)
        value = value.view(value.shape[0], value.shape[1], self.h, self.d_k).transpose(1, 2)

        # Calculate attention
        x, self.attention_scores = MultiHeadAttentionBlock.attention(query, key, value, mask, self.dropout)

        # Combine all the heads together
        # (batch, h, seq_len, d_k) --> (batch, seq_len, h, d_k) --> (batch, seq_len, d_model)
        x = x.transpose(1, 2).contiguous().view(x.shape[0], -1, self.h * self.d_k)

        # Multiply by Wo
        # (batch, seq_len, d_model) --> (batch, seq_len, d_model)
        return self.w_o(x)

Residual Connection Layer¶

This is a skip connection: the input of each sublayer is added back to that sublayer's output at the Add & Norm step.

class ResidualConnection(nn.Module):

    def __init__(self, features: int, dropout: float) -> None:
        super().__init__()
        self.dropout = nn.Dropout(dropout)
        self.norm = LayerNormalization(features)

    def forward(self, x, sublayer):
        return x + self.dropout(sublayer(self.norm(x)))
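
A toy version of the wrapper, on plain lists and without dropout, shows what the skip path buys. Note that the class above applies the normalization before the sublayer (pre-norm), whereas the original paper normalizes after the addition, LayerNorm(x + Sublayer(x)). The function and the two stand-in sublayers below are hypothetical.

```python
def residual(x, sublayer, norm=lambda v: v):
    """Pre-norm skip connection: x + sublayer(norm(x)), without dropout."""
    y = sublayer(norm(x))
    return [a + b for a, b in zip(x, y)]

x = [1.0, -2.0, 0.5]

# A sublayer that outputs zeros leaves the input untouched
zero_sublayer = lambda v: [0.0 for _ in v]
# A sublayer that doubles its input is added on top of the input
double_sublayer = lambda v: [2.0 * a for a in v]

passthrough = residual(x, zero_sublayer)
added = residual(x, double_sublayer)
print(passthrough, added)
```

Because the input always reaches the output directly, a sublayer only has to learn a correction to it, which tends to make deep stacks easier to train.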

The Encoder Block¶

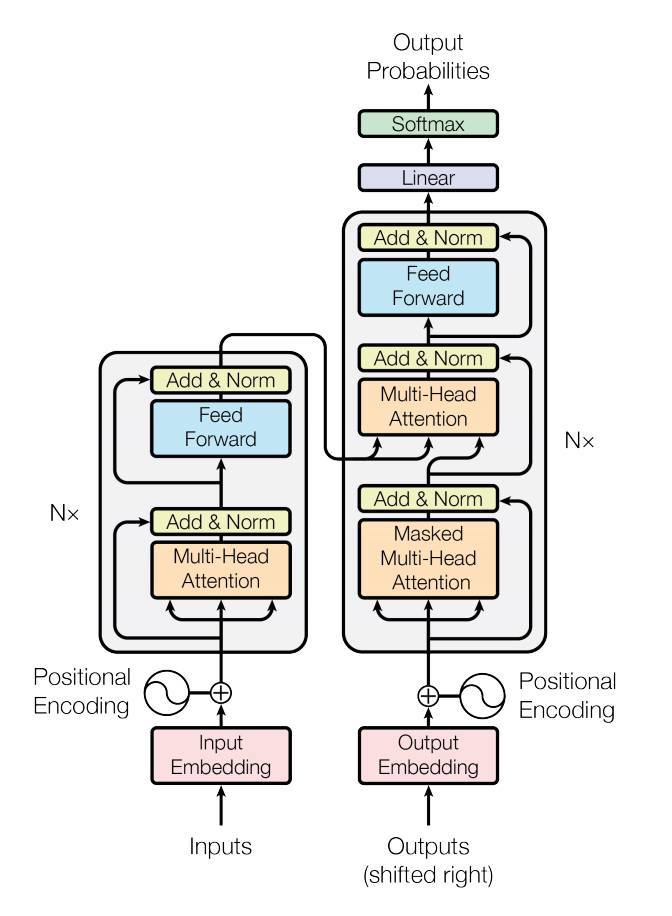

As depicted in the figure above, the encoder is a stack of N encoder blocks, where the output of each block is the input to the next one. The output of the last block is then passed to the decoder.

class EncoderBlock(nn.Module):

    def __init__(self, features: int, self_attention_block: MultiHeadAttentionBlock, feed_forward_block: FeedForwardBlock, dropout: float) -> None:
        super().__init__()
        self.self_attention_block = self_attention_block
        self.feed_forward_block = feed_forward_block
        self.residual_connections = nn.ModuleList([ResidualConnection(features, dropout) for _ in range(2)])

    def forward(self, x, src_mask):
        x = self.residual_connections[0](x, lambda x: self.self_attention_block(x, x, x, src_mask))
        x = self.residual_connections[1](x, self.feed_forward_block)
        return x

class Encoder(nn.Module):

    def __init__(self, features: int, layers: nn.ModuleList) -> None:
        super().__init__()
        self.layers = layers
        self.norm = LayerNormalization(features)

    def forward(self, x, mask):
        for layer in self.layers:
            x = layer(x, mask)
        return self.norm(x)

Decoder¶

The first attention block is a self-attention block, similar to the one in the encoder block but with a different mask (the target mask).

The second attention block is a cross-attention block that takes the output of the first block as the query and the encoder output as key and value.

class DecoderBlock(nn.Module):

    def __init__(self, features: int, self_attention_block: MultiHeadAttentionBlock, cross_attention_block: MultiHeadAttentionBlock, feed_forward_block: FeedForwardBlock, dropout: float) -> None:
        super().__init__()
        self.self_attention_block = self_attention_block
        self.cross_attention_block = cross_attention_block
        self.feed_forward_block = feed_forward_block
        self.residual_connections = nn.ModuleList([ResidualConnection(features, dropout) for _ in range(3)])

    def forward(self, x, encoder_output, src_mask, tgt_mask):
        x = self.residual_connections[0](x, lambda x: self.self_attention_block(x, x, x, tgt_mask))
        x = self.residual_connections[1](x, lambda x: self.cross_attention_block(x, encoder_output, encoder_output, src_mask))
        x = self.residual_connections[2](x, self.feed_forward_block)
        return x

class Decoder(nn.Module):

    def __init__(self, features: int, layers: nn.ModuleList) -> None:
        super().__init__()
        self.layers = layers
        self.norm = LayerNormalization(features)

    def forward(self, x, encoder_output, src_mask, tgt_mask):
        for layer in self.layers:
            x = layer(x, encoder_output, src_mask, tgt_mask)
        return self.norm(x)

The projection layer¶

This layer maps the output of the decoder to the target vocabulary size, so that a softmax can be applied to pick the next word/token.

class ProjectionLayer(nn.Module):

    def __init__(self, d_model, vocab_size) -> None:
        super().__init__()
        self.proj = nn.Linear(d_model, vocab_size)

    def forward(self, x) -> torch.Tensor:
        # (batch, seq_len, d_model) --> (batch, seq_len, vocab_size)
        return self.proj(x)

The transformer block¶

class Transformer(nn.Module):

    def __init__(self, encoder: Encoder, decoder: Decoder, src_embed: InputEmbeddings, tgt_embed: InputEmbeddings, src_pos: PositionalEncoding, tgt_pos: PositionalEncoding, projection_layer: ProjectionLayer) -> None:
        super().__init__()
        self.encoder = encoder
        self.decoder = decoder
        self.src_embed = src_embed
        self.tgt_embed = tgt_embed
        self.src_pos = src_pos
        self.tgt_pos = tgt_pos
        self.projection_layer = projection_layer

    def encode(self, src, src_mask):
        # (batch, seq_len, d_model)
        src = self.src_embed(src)
        src = self.src_pos(src)
        return self.encoder(src, src_mask)

    def decode(self, encoder_output: torch.Tensor, src_mask: torch.Tensor, tgt: torch.Tensor, tgt_mask: torch.Tensor):
        # (batch, seq_len, d_model)
        tgt = self.tgt_embed(tgt)
        tgt = self.tgt_pos(tgt)
        return self.decoder(tgt, encoder_output, src_mask, tgt_mask)

    def project(self, x):
        # (batch, seq_len, vocab_size)
        return self.projection_layer(x)

## Build the transformer

def build_transformer(src_vocab_size: int, tgt_vocab_size: int, src_seq_len: int, tgt_seq_len: int, d_model: int=512, N: int=6, h: int=8, dropout: float=0.1, d_ff: int=2048) -> Transformer:
    # Create the embedding layers
    src_embed = InputEmbeddings(d_model, src_vocab_size)
    tgt_embed = InputEmbeddings(d_model, tgt_vocab_size)

    # Create the positional encoding layers
    src_pos = PositionalEncoding(d_model, src_seq_len, dropout)
    tgt_pos = PositionalEncoding(d_model, tgt_seq_len, dropout)

    # Create the encoder blocks
    encoder_blocks = []
    for _ in range(N):
        encoder_self_attention_block = MultiHeadAttentionBlock(d_model, h, dropout)
        feed_forward_block = FeedForwardBlock(d_model, d_ff, dropout)
        encoder_block = EncoderBlock(d_model, encoder_self_attention_block, feed_forward_block, dropout)
        encoder_blocks.append(encoder_block)

    # Create the decoder blocks
    decoder_blocks = []
    for _ in range(N):
        decoder_self_attention_block = MultiHeadAttentionBlock(d_model, h, dropout)
        decoder_cross_attention_block = MultiHeadAttentionBlock(d_model, h, dropout)
        feed_forward_block = FeedForwardBlock(d_model, d_ff, dropout)
        decoder_block = DecoderBlock(d_model, decoder_self_attention_block, decoder_cross_attention_block, feed_forward_block, dropout)
        decoder_blocks.append(decoder_block)

    # Create the encoder and decoder
    encoder = Encoder(d_model, nn.ModuleList(encoder_blocks))
    decoder = Decoder(d_model, nn.ModuleList(decoder_blocks))

    # Create the projection layer
    projection_layer = ProjectionLayer(d_model, tgt_vocab_size)

    # Create the transformer
    transformer = Transformer(encoder, decoder, src_embed, tgt_embed, src_pos, tgt_pos, projection_layer)

    # Initialize the parameters
    for p in transformer.parameters():
        if p.dim() > 1:
            nn.init.xavier_uniform_(p)

    return transformer

Let's build the tokenizer¶

def get_all_sentences(ds, lang):
    for item in ds:
        yield item['translation'][lang]

def get_or_build_tokenizer(config, ds, lang):
    tokenizer_path = Path(config['tokenizer_file'].format(lang))
    if not tokenizer_path.exists():
        # Most code taken from: https://huggingface.co/docs/tokenizers/quicktour
        tokenizer = Tokenizer(WordLevel(unk_token="[UNK]"))
        tokenizer.pre_tokenizer = Whitespace()
        trainer = WordLevelTrainer(special_tokens=["[UNK]", "[PAD]", "[SOS]", "[EOS]"], min_frequency=2)
        tokenizer.train_from_iterator(get_all_sentences(ds, lang), trainer=trainer)
        tokenizer.save(str(tokenizer_path))
    else:
        tokenizer = Tokenizer.from_file(str(tokenizer_path))
    return tokenizer

Causal mask: a word must not attend to the words that come after it¶

def causal_mask(size):
    mask = torch.triu(torch.ones((1, size, size)), diagonal=1).type(torch.int)
    return mask == 0
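
The shape of the mask is easier to see when printed. Below is a minimal sketch that builds the same lower-triangular pattern with nested lists instead of torch.triu (and without the leading batch dimension); the function name is made up for the example.

```python
def causal_mask_lists(size: int):
    """True where query position i may attend to key position j (j <= i)."""
    return [[j <= i for j in range(size)] for i in range(size)]

mask = causal_mask_lists(4)
for row in mask:
    # "x" marks an allowed position, "." a masked one
    print("".join("x" if allowed else "." for allowed in row))
```

Row i describes what token i is allowed to look at: itself and everything before it, nothing after it.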

Get the dataset (Hugging Face opus_books dataset)¶

class BilingualDataset(Dataset):

    def __init__(self, ds, tokenizer_src, tokenizer_tgt, src_lang, tgt_lang, seq_len):
        super().__init__()
        self.seq_len = seq_len

        self.ds = ds
        self.tokenizer_src = tokenizer_src
        self.tokenizer_tgt = tokenizer_tgt
        self.src_lang = src_lang
        self.tgt_lang = tgt_lang

        self.sos_token = torch.tensor([tokenizer_tgt.token_to_id("[SOS]")], dtype=torch.int64)
        self.eos_token = torch.tensor([tokenizer_tgt.token_to_id("[EOS]")], dtype=torch.int64)
        self.pad_token = torch.tensor([tokenizer_tgt.token_to_id("[PAD]")], dtype=torch.int64)

    def __len__(self):
        return len(self.ds)

    def __getitem__(self, idx):
        src_target_pair = self.ds[idx]
        src_text = src_target_pair['translation'][self.src_lang]
        tgt_text = src_target_pair['translation'][self.tgt_lang]

        # Transform the text into tokens
        enc_input_tokens = self.tokenizer_src.encode(src_text).ids
        dec_input_tokens = self.tokenizer_tgt.encode(tgt_text).ids

        # Add [SOS], [EOS] and padding to each sentence
        enc_num_padding_tokens = self.seq_len - len(enc_input_tokens) - 2 # We will add [SOS] and [EOS]
        # The decoder input only gets [SOS]; [EOS] is added only to the label
        dec_num_padding_tokens = self.seq_len - len(dec_input_tokens) - 1

        # Make sure the number of padding tokens is not negative. If it is, the sentence is too long
        if enc_num_padding_tokens < 0 or dec_num_padding_tokens < 0:
            raise ValueError("Sentence is too long")

        # Add [SOS] and [EOS] tokens
        encoder_input = torch.cat(
            [
                self.sos_token,
                torch.tensor(enc_input_tokens, dtype=torch.int64),
                self.eos_token,
                torch.tensor([self.pad_token] * enc_num_padding_tokens, dtype=torch.int64),
            ],
            dim=0,
        )

        # Add only the [SOS] token
        decoder_input = torch.cat(
            [
                self.sos_token,
                torch.tensor(dec_input_tokens, dtype=torch.int64),
                torch.tensor([self.pad_token] * dec_num_padding_tokens, dtype=torch.int64),
            ],
            dim=0,
        )

        # Add only the [EOS] token
        label = torch.cat(
            [
                torch.tensor(dec_input_tokens, dtype=torch.int64),
                self.eos_token,
                torch.tensor([self.pad_token] * dec_num_padding_tokens, dtype=torch.int64),
            ],
            dim=0,
        )

        # Double check the size of the tensors to make sure they are all seq_len long
        assert encoder_input.size(0) == self.seq_len
        assert decoder_input.size(0) == self.seq_len
        assert label.size(0) == self.seq_len

        return {
            "encoder_input": encoder_input, # (seq_len)
            "decoder_input": decoder_input, # (seq_len)
            "encoder_mask": (encoder_input != self.pad_token).unsqueeze(0).unsqueeze(0).int(), # (1, 1, seq_len)
            "decoder_mask": (decoder_input != self.pad_token).unsqueeze(0).int() & causal_mask(decoder_input.size(0)), # (1, seq_len) & (1, seq_len, seq_len),
            "label": label, # (seq_len)
            "src_text": src_text,
            "tgt_text": tgt_text,
        }
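
The padding arithmetic in __getitem__ can be followed on a toy sentence pair. The sketch below uses plain lists and hypothetical token ids (the special-token ids and the word ids are made up for the example, not read from a trained tokenizer).

```python
# Hypothetical special-token ids, for illustration only
SOS, EOS, PAD = 2, 3, 1

def build_example(src_ids, tgt_ids, seq_len):
    enc_pad = seq_len - len(src_ids) - 2  # room for [SOS] and [EOS]
    dec_pad = seq_len - len(tgt_ids) - 1  # room for [SOS] (input) or [EOS] (label)
    if enc_pad < 0 or dec_pad < 0:
        raise ValueError("Sentence is too long")
    encoder_input = [SOS] + src_ids + [EOS] + [PAD] * enc_pad
    decoder_input = [SOS] + tgt_ids + [PAD] * dec_pad
    label = tgt_ids + [EOS] + [PAD] * dec_pad
    return encoder_input, decoder_input, label

enc, dec, label = build_example(src_ids=[11, 12, 13], tgt_ids=[21, 22], seq_len=6)
print(enc)    # [2, 11, 12, 13, 3, 1]
print(dec)    # [2, 21, 22, 1, 1, 1]
print(label)  # [21, 22, 3, 1, 1, 1]
```

At every position the label holds the token the decoder should predict next: after [SOS] it should output 21, after 21 it should output 22, and after 22 it should output [EOS].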

def get_ds(config):
    # It only has the train split, so we divide it ourselves
    ds_raw = load_dataset(f"{config['datasource']}", f"{config['lang_src']}-{config['lang_tgt']}", split='train')

    # Build tokenizers
    tokenizer_src = get_or_build_tokenizer(config, ds_raw, config['lang_src'])
    tokenizer_tgt = get_or_build_tokenizer(config, ds_raw, config['lang_tgt'])

    # Keep 90% for training, 10% for validation
    train_ds_size = int(0.9 * len(ds_raw))
    val_ds_size = len(ds_raw) - train_ds_size
    train_ds_raw, val_ds_raw = random_split(ds_raw, [train_ds_size, val_ds_size])

    train_ds = BilingualDataset(train_ds_raw, tokenizer_src, tokenizer_tgt, config['lang_src'], config['lang_tgt'], config['seq_len'])
    val_ds = BilingualDataset(val_ds_raw, tokenizer_src, tokenizer_tgt, config['lang_src'], config['lang_tgt'], config['seq_len'])

    # Find the maximum length of the source and target sentences
    max_len_src = 0
    max_len_tgt = 0

    for item in ds_raw:
        src_ids = tokenizer_src.encode(item['translation'][config['lang_src']]).ids
        tgt_ids = tokenizer_tgt.encode(item['translation'][config['lang_tgt']]).ids
        max_len_src = max(max_len_src, len(src_ids))
        max_len_tgt = max(max_len_tgt, len(tgt_ids))

    print(f'Max length of source sentence: {max_len_src}')
    print(f'Max length of target sentence: {max_len_tgt}')

    train_dataloader = DataLoader(train_ds, batch_size=config['batch_size'], shuffle=True)
    val_dataloader = DataLoader(val_ds, batch_size=1, shuffle=True)

    return train_dataloader, val_dataloader, tokenizer_src, tokenizer_tgt

Let's build the training loop¶

def greedy_decode(model, source, source_mask, tokenizer_src, tokenizer_tgt, max_len, device):
    sos_idx = tokenizer_tgt.token_to_id('[SOS]')
    eos_idx = tokenizer_tgt.token_to_id('[EOS]')

    # Precompute the encoder output and reuse it for every step
    encoder_output = model.encode(source, source_mask)
    # Initialize the decoder input with the sos token
    decoder_input = torch.empty(1, 1).fill_(sos_idx).type_as(source).to(device)
    while True:
        if decoder_input.size(1) == max_len:
            break

        # build mask for target
        decoder_mask = causal_mask(decoder_input.size(1)).type_as(source_mask).to(device)

        # calculate output
        out = model.decode(encoder_output, source_mask, decoder_input, decoder_mask)

        # get next token
        prob = model.project(out[:, -1])
        _, next_word = torch.max(prob, dim=1)
        decoder_input = torch.cat(
            [decoder_input, torch.empty(1, 1).type_as(source).fill_(next_word.item()).to(device)], dim=1
        )

        if next_word == eos_idx:
            break

    return decoder_input.squeeze(0)
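
The control flow of greedy decoding is independent of the model, so it can be sketched with a stand-in scoring function. Everything below (the function names, the toy scorer, the 6-token vocabulary) is hypothetical and only meant to show the loop: pick the highest-scoring token, append it, stop on [EOS] or when max_len is reached.

```python
def greedy_decode_toy(next_token_scores, sos_idx, eos_idx, max_len):
    """Repeatedly append the highest-scoring next token until [EOS] or max_len."""
    decoded = [sos_idx]
    while len(decoded) < max_len:
        scores = next_token_scores(decoded)  # one score per vocabulary id
        next_id = max(range(len(scores)), key=scores.__getitem__)
        decoded.append(next_id)
        if next_id == eos_idx:
            break
    return decoded

# Stand-in for the model: always prefers the id (last + 1) in a vocabulary of 6 ids
def toy_scores(prefix):
    target = (prefix[-1] + 1) % 6
    return [1.0 if i == target else 0.0 for i in range(6)]

result = greedy_decode_toy(toy_scores, sos_idx=0, eos_idx=3, max_len=10)
truncated = greedy_decode_toy(toy_scores, sos_idx=0, eos_idx=5, max_len=3)
print(result, truncated)
```

The first call stops because [EOS] is produced; the second stops because the length limit is hit before [EOS] appears.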

def run_validation(model, validation_ds, tokenizer_src, tokenizer_tgt, max_len, device, print_msg, global_step, writer, num_examples=2):
    model.eval()
    count = 0

    source_texts = []
    expected = []
    predicted = []

    try:
        # get the console window width
        with os.popen('stty size', 'r') as console:
            _, console_width = console.read().split()
            console_width = int(console_width)
    except Exception:
        # If we can't get the console width, use 80 as default
        console_width = 80

    with torch.no_grad():
        for batch in validation_ds:
            count += 1
            encoder_input = batch["encoder_input"].to(device) # (b, seq_len)
            encoder_mask = batch["encoder_mask"].to(device) # (b, 1, 1, seq_len)

            # check that the batch size is 1
            assert encoder_input.size(0) == 1, "Batch size must be 1 for validation"

            model_out = greedy_decode(model, encoder_input, encoder_mask, tokenizer_src, tokenizer_tgt, max_len, device)

            source_text = batch["src_text"][0]
            target_text = batch["tgt_text"][0]
            model_out_text = tokenizer_tgt.decode(model_out.detach().cpu().numpy())

            source_texts.append(source_text)
            expected.append(target_text)
            predicted.append(model_out_text)

            # Print the source, target and model output
            print_msg('-'*console_width)
            print_msg(f"{f'SOURCE: ':>12}{source_text}")
            print_msg(f"{f'TARGET: ':>12}{target_text}")
            print_msg(f"{f'PREDICTED: ':>12}{model_out_text}")

            if count == num_examples:
                print_msg('-'*console_width)
                break

    if writer:
        # Metrics are taken from torchmetrics.text; importing them from the
        # top-level torchmetrics package is deprecated
        # Compute the char error rate
        metric = torchmetrics.text.CharErrorRate()
        cer = metric(predicted, expected)
        writer.add_scalar('validation cer', cer, global_step)
        writer.flush()

        # Compute the word error rate
        metric = torchmetrics.text.WordErrorRate()
        wer = metric(predicted, expected)
        writer.add_scalar('validation wer', wer, global_step)
        writer.flush()

        # Compute the BLEU metric
        metric = torchmetrics.text.BLEUScore()
        bleu = metric(predicted, expected)
        writer.add_scalar('validation BLEU', bleu, global_step)
        writer.flush()
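
To give a feeling for what the character and word error rates measure, here is a rough sketch based on the usual definition: edit distance between prediction and reference, divided by the reference length. It is an illustration written from that definition, not the torchmetrics implementation, and the sample strings are invented.

```python
def edit_distance(a, b):
    """Levenshtein distance between two sequences (strings or lists of words)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            cost = 0 if x == y else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]

def word_error_rate(predicted: str, expected: str) -> float:
    ref = expected.split()
    return edit_distance(predicted.split(), ref) / len(ref)

def char_error_rate(predicted: str, expected: str) -> float:
    return edit_distance(predicted, expected) / len(expected)

wer = word_error_rate("quando andiamo oggi", "quando ci vediamo oggi")
cer = char_error_rate("naso", "nasi")
print(wer, cer)
```

Lower is better for both rates, and a value of 0 means the prediction matches the reference exactly. BLEU works the other way around: it rewards n-gram overlap, so higher is better.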

def get_model(config, vocab_src_len, vocab_tgt_len):
    model = build_transformer(vocab_src_len, vocab_tgt_len, config["seq_len"], config['seq_len'], d_model=config['d_model'])
    return model

def train_model(config):
    # Define the device
    device = "cuda" if torch.cuda.is_available() else "mps" if torch.backends.mps.is_available() else "cpu"
    print("Using device:", device)
    if (device == 'cuda'):
        # device is still a string here, so query the first CUDA device by index
        print(f"Device name: {torch.cuda.get_device_name(0)}")
        print(f"Device memory: {torch.cuda.get_device_properties(0).total_memory / 1024 ** 3} GB")
    elif (device == 'mps'):
        print("Device name: <mps>")
    else:
        print("NOTE: If you have a GPU, consider using it for training.")
        print("      On a Windows machine with NVidia GPU, check this video: https://www.youtube.com/watch?v=GMSjDTU8Zlc")
        print("      On a Mac machine, run: pip3 install --pre torch torchvision torchaudio torchtext --index-url https://download.pytorch.org/whl/nightly/cpu")
    device = torch.device(device)

    # Make sure the weights folder exists
    Path(f"{config['datasource']}_{config['model_folder']}").mkdir(parents=True, exist_ok=True)

    train_dataloader, val_dataloader, tokenizer_src, tokenizer_tgt = get_ds(config)
    model = get_model(config, tokenizer_src.get_vocab_size(), tokenizer_tgt.get_vocab_size()).to(device)
    # Tensorboard
    writer = SummaryWriter(config['experiment_name'])

    optimizer = torch.optim.Adam(model.parameters(), lr=config['lr'], eps=1e-9)

    # If the user specified a model to preload before training, load it
    initial_epoch = 0
    global_step = 0
    preload = config['preload']
    model_filename = latest_weights_file_path(config) if preload == 'latest' else get_weights_file_path(config, preload) if preload else None
    if model_filename:
        print(f'Preloading model {model_filename}')
        # map_location lets a checkpoint saved on one device be loaded on another
        state = torch.load(model_filename, map_location=device)
        model.load_state_dict(state['model_state_dict'])
        initial_epoch = state['epoch'] + 1
        optimizer.load_state_dict(state['optimizer_state_dict'])
        global_step = state['global_step']
    else:
        print('No model to preload, starting from scratch')

    # The labels are target-language tokens, so the padding id comes from the target tokenizer
    loss_fn = nn.CrossEntropyLoss(ignore_index=tokenizer_tgt.token_to_id('[PAD]'), label_smoothing=0.1).to(device)

    for epoch in range(initial_epoch, config['num_epochs']):
        torch.cuda.empty_cache()
        model.train()
        batch_iterator = tqdm(train_dataloader, desc=f"Processing Epoch {epoch:02d}")
        for batch in batch_iterator:

            encoder_input = batch['encoder_input'].to(device) # (B, seq_len)
            decoder_input = batch['decoder_input'].to(device) # (B, seq_len)
            encoder_mask = batch['encoder_mask'].to(device) # (B, 1, 1, seq_len)
            decoder_mask = batch['decoder_mask'].to(device) # (B, 1, seq_len, seq_len)

            # Run the tensors through the encoder, decoder and the projection layer
            encoder_output = model.encode(encoder_input, encoder_mask) # (B, seq_len, d_model)
            decoder_output = model.decode(encoder_output, encoder_mask, decoder_input, decoder_mask) # (B, seq_len, d_model)
            proj_output = model.project(decoder_output) # (B, seq_len, vocab_size)

            # Compare the output with the label
            label = batch['label'].to(device) # (B, seq_len)

            # Compute the loss using a simple cross entropy
            loss = loss_fn(proj_output.view(-1, tokenizer_tgt.get_vocab_size()), label.view(-1))
            batch_iterator.set_postfix({"loss": f"{loss.item():6.3f}"})

            # Log the loss
            writer.add_scalar('train loss', loss.item(), global_step)
            writer.flush()

            # Backpropagate the loss
            loss.backward()

            # Update the weights
            optimizer.step()
            optimizer.zero_grad(set_to_none=True)

            global_step += 1

        # Run validation at the end of every epoch
        run_validation(model, val_dataloader, tokenizer_src, tokenizer_tgt, config['seq_len'], device, lambda msg: batch_iterator.write(msg), global_step, writer)

        # Save the model at the end of every epoch
        model_filename = get_weights_file_path(config, f"{epoch:02d}")
        torch.save({
            'epoch': epoch,
            'model_state_dict': model.state_dict(),
            'optimizer_state_dict': optimizer.state_dict(),
            'global_step': global_step
        }, model_filename)
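
The role of ignore_index in the loss can be illustrated without PyTorch. The sketch below computes a mean cross entropy over the positions whose label is not padding; it leaves out label smoothing for simplicity, and the logits, labels and padding id are invented for the example.

```python
import math

def cross_entropy_ignore_pad(logits, labels, pad_id):
    """Mean negative log-likelihood over positions whose label is not pad_id."""
    losses = []
    for row, label in zip(logits, labels):
        if label == pad_id:
            continue  # padded positions contribute nothing to the loss
        m = max(row)
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in row))
        losses.append(log_sum_exp - row[label])
    return sum(losses) / len(losses)

PAD = 1  # hypothetical padding id
logits = [[0.0, 0.0, 2.0], [0.0, 0.0, 0.0], [5.0, 0.0, 0.0]]
labels = [2, 0, PAD]

loss = cross_entropy_ignore_pad(logits, labels, PAD)
# Changing the logits at the padded position does not change the loss
loss_changed_pad = cross_entropy_ignore_pad(logits[:2] + [[0.0, 9.0, 0.0]], labels, PAD)
print(round(loss, 4), loss == loss_changed_pad)
```

Since most of each 350-token sequence is padding, ignoring those positions keeps the model from being rewarded for simply predicting [PAD].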

Train it¶

config = get_config()

train_model(config)

Using device: mps
Device name: <mps>
Max length of source sentence: 309
Max length of target sentence: 274
Preloading model opus_books_weights/tmodel_02.pt

Processing Epoch 03: 100%|██████████| 3638/3638 [7:29:05<00:00, 7.41s/it, loss=4.998]
huggingface/tokenizers: The current process just got forked, after parallelism has already been used. Disabling parallelism to avoid deadlocks...
To disable this warning, you can either:
- Avoid using `tokenizers` before the fork if possible
- Explicitly set the environment variable TOKENIZERS_PARALLELISM=(true | false)
stty: stdin isn't a terminal

--------------------------------------------------------------------------------

SOURCE: "Hi! look at your nose."

TARGET: — Ehi, guardate il vostro naso.

PREDICTED: — Ah ! — esclamò il Re .

--------------------------------------------------------------------------------

SOURCE: 'When shall we meet?' asked Varenka.

TARGET: — Quando ci vediamo? — chiese Varen’ka.

PREDICTED: — Quando andiamo ? — disse Varen ’ ka .

--------------------------------------------------------------------------------

Processing Epoch 04: 100%|██████████| 3638/3638 [1:18:02<00:00, 1.29s/it, loss=4.488]

--------------------------------------------------------------------------------

SOURCE: The other is not work for the Nobility.

TARGET: Altrimenti non è lavoro da nobili.

PREDICTED: Il lavoro non è nulla di .

--------------------------------------------------------------------------------

SOURCE: When I again unclosed my eyes, a loud bell was ringing; the girls were up and dressing; day had not yet begun to dawn, and a rushlight or two burned in the room.

TARGET: Il giorno non era ancora spuntato e un paio di lumi erano accesi nel dormitorio.

PREDICTED: Quando mi fermai .

--------------------------------------------------------------------------------

Processing Epoch 05: 100%|██████████| 3638/3638 [1:19:53<00:00, 1.32s/it, loss=4.686]

--------------------------------------------------------------------------------

SOURCE: 'No, I didn't,' said Alice: 'I don't think it's at all a pity. I said "What for?"'

TARGET: — Ma no, — rispose Alice: — Ho detto per che reato?

PREDICTED: — No , no , — disse Alice , — non credo che sia una donna .

--------------------------------------------------------------------------------

SOURCE: And the best thing she did was to leave that half-witted brother-in-law of yours!

TARGET: E ha fatto ancora meglio, perché ha abbandonato quel pazzo di vostro cognato.

PREDICTED: E per quanto è la cosa , che è qui , , la tua salute .

--------------------------------------------------------------------------------

Processing Epoch 06: 100%|██████████| 3638/3638 [1:24:18<00:00, 1.39s/it, loss=4.335]

--------------------------------------------------------------------------------

SOURCE: Levin smiled, tightening his arm, and under Oblonsky's fingers a lump like a Dutch cheese and hard as steel bulged out beneath the fine cloth of Levin's coat.

TARGET: Levin sorrise, tese il braccio e, sotto le dita di Stepan Arkad’ic, come un rotondo formaggio, si sollevò, al di sotto del panno sottile della finanziera, una massa di acciaio.

PREDICTED: Levin sorrise , il braccio e con le mani di Stepan Arkad ’ ic , con le dita come un di e di , come un di velluto di velluto , di Levin .

--------------------------------------------------------------------------------

SOURCE: There, I had a friend's face under my gaze; and what did it signify that those young ladies turned their backs on me?

TARGET: Non m'importava nulla che le ragazze mi voltassero le spalle; avevo una faccia amica dinanzi a me.

PREDICTED: Vi ho già un amico di mio , e che cosa mi aveva detto che i bambini mi avevano detto che le signore si .

--------------------------------------------------------------------------------

Processing Epoch 07: 100%|██████████| 3638/3638 [1:18:23<00:00, 1.29s/it, loss=4.157]

--------------------------------------------------------------------------------

SOURCE: But I'll go and wash.'

TARGET: Ma ecco, vado a lavarmi.

PREDICTED: Ma io andrò a .

--------------------------------------------------------------------------------

SOURCE: I noticed, as the song progressed, that a good many other people seemed to have their eye fixed on the two young men, as well as myself.

TARGET: M’accorsi, mentre la canzonetta continuava, che molti altri fissavano i due giovani, seguendo il mio sguardo.

PREDICTED: Mi accorsi che il pianoforte si un altro , che si le due persone che mi circondavano .

--------------------------------------------------------------------------------

Processing Epoch 08: 100%|██████████| 3638/3638 [1:23:21<00:00, 1.37s/it, loss=3.828]

--------------------------------------------------------------------------------

SOURCE: Helen she held a little longer than me: she let her go more reluctantly; it was Helen her eye followed to the door; it was for her she a second time breathed a sad sigh; for her she wiped a tear from her cheek.

TARGET: Trattenne Elena stretta al cuore un poco più di me, se ne staccò più difficilmente e la seguì con l'occhio, e per lei sospirò e si rasciugò una lagrima.

PREDICTED: Elena mi fece un poco più poco più di più , e riprese a guardarla con un ' occhiata .

--------------------------------------------------------------------------------

SOURCE: They do not know how at every step he hurt me and remained self-satisfied.

TARGET: Non sanno che in ogni occasione mi ha umiliato, compiacendosene.

PREDICTED: Non sa come a quanto a quanto a quanto a me , e a volte mi .

--------------------------------------------------------------------------------

Processing Epoch 09: 100%|██████████| 3638/3638 [1:51:15<00:00, 1.83s/it, loss=3.634]

--------------------------------------------------------------------------------

SOURCE: 'That means that she has again not slept all night,' he thought.

TARGET: Stepan Arkad’ic sospirò. «Già; non avrà dormito tutta la notte» pensò.

PREDICTED: “ Questa è di nuovo che non è tornata a lei — pensava .

--------------------------------------------------------------------------------

SOURCE: No one ever convinces another.'

TARGET: Tanto nessuno mai convincerà l’altro.

PREDICTED: Nessuno può mai avere un ’ altra .

--------------------------------------------------------------------------------
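The training log repeats two avoidable warnings: the torchmetrics deprecation notice and the tokenizers fork message. Below is a minimal sketch of how they could be silenced, following the hints printed in the warnings themselves. The try/except guard is an addition of mine so that the snippet also runs where torchmetrics is not installed.

```python
import os

# Set this before the tokenizers are first used and the process forks,
# as suggested by the huggingface/tokenizers warning.
os.environ["TOKENIZERS_PARALLELISM"] = "false"

try:
    # Import path suggested by the FutureWarning, replacing the top-level
    # `torchmetrics.BLEUScore`, `torchmetrics.CharErrorRate`, etc.
    from torchmetrics.text import BLEUScore, CharErrorRate, WordErrorRate
except ImportError:
    # Guard for environments without torchmetrics; not needed in the notebook.
    BLEUScore = CharErrorRate = WordErrorRate = None
```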

Run inference with the trained model¶

def translate(sentence: str):
    # Define the device, tokenizers, and model
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    print("Using device:", device)
    config = get_config()
    tokenizer_src = Tokenizer.from_file(str(Path(config['tokenizer_file'].format(config['lang_src']))))
    tokenizer_tgt = Tokenizer.from_file(str(Path(config['tokenizer_file'].format(config['lang_tgt']))))
    model = build_transformer(tokenizer_src.get_vocab_size(), tokenizer_tgt.get_vocab_size(), config["seq_len"], config['seq_len'], d_model=config['d_model']).to(device)

    # Load the pretrained weights onto the selected device
    model_filename = latest_weights_file_path(config)
    state = torch.load(model_filename, map_location=device)
    model.load_state_dict(state['model_state_dict'])

    # If the sentence is a number, use it as an index into the dataset
    label = ""
    if isinstance(sentence, int) or sentence.isdigit():
        sample_id = int(sentence)
        ds = load_dataset(f"{config['datasource']}", f"{config['lang_src']}-{config['lang_tgt']}", split='all')
        ds = BilingualDataset(ds, tokenizer_src, tokenizer_tgt, config['lang_src'], config['lang_tgt'], config['seq_len'])
        sentence = ds[sample_id]['src_text']
        label = ds[sample_id]["tgt_text"]
    seq_len = config['seq_len']

    # Translate the sentence
    model.eval()
    with torch.no_grad():
        # Precompute the encoder output and reuse it for every generation step
        source = tokenizer_src.encode(sentence)
        source = torch.cat([
            torch.tensor([tokenizer_src.token_to_id('[SOS]')], dtype=torch.int64),
            torch.tensor(source.ids, dtype=torch.int64),
            torch.tensor([tokenizer_src.token_to_id('[EOS]')], dtype=torch.int64),
            torch.tensor([tokenizer_src.token_to_id('[PAD]')] * (seq_len - len(source.ids) - 2), dtype=torch.int64)
        ], dim=0).to(device)
        source_mask = (source != tokenizer_src.token_to_id('[PAD]')).unsqueeze(0).unsqueeze(0).int().to(device)
        encoder_output = model.encode(source, source_mask)

        # Initialize the decoder input with the SOS token
        decoder_input = torch.empty(1, 1).fill_(tokenizer_tgt.token_to_id('[SOS]')).type_as(source).to(device)

        # Print the source sentence and target start prompt
        if label != "": print(f"{'ID: ':>12}{sample_id}")
        print(f"{'SOURCE: ':>12}{sentence}")
        if label != "": print(f"{'TARGET: ':>12}{label}")
        print(f"{'PREDICTED: ':>12}", end='')

        # Generate the translation word by word
        while decoder_input.size(1) < seq_len:
            # Build the causal mask (1 = may attend, the same convention as source_mask)
            # and calculate the output. The earlier triu(..., diagonal=1) mask marked the
            # future positions instead of the past ones.
            decoder_mask = torch.tril(torch.ones((1, decoder_input.size(1), decoder_input.size(1)))).type_as(source_mask).to(device)
            out = model.decode(encoder_output, source_mask, decoder_input, decoder_mask)

            # Project the last position onto the vocabulary and pick the most likely token
            prob = model.project(out[:, -1])
            _, next_word = torch.max(prob, dim=1)
            decoder_input = torch.cat([decoder_input, torch.empty(1, 1).type_as(source).fill_(next_word.item()).to(device)], dim=1)

            # Print the translated word
            print(f"{tokenizer_tgt.decode([next_word.item()])}", end=' ')

            # Break if we predict the end-of-sentence token
            if next_word == tokenizer_tgt.token_to_id('[EOS]'):
                break

    # Convert ids to tokens
    return tokenizer_tgt.decode(decoder_input[0].tolist())
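The decoder mask built inside the generation loop has to follow the same convention as source_mask, where a nonzero entry means "may attend". The following is a minimal pure-Python sketch of that convention, not the notebook's tensor code. The helper names causal_mask_rows and masked_softmax are illustrative, and the -1e9 fill value is an assumption about how the attention block applies the mask.

```python
import math

def causal_mask_rows(size):
    """mask[i][j] == 1 when position i may attend to position j (j <= i)."""
    return [[1 if j <= i else 0 for j in range(size)] for i in range(size)]

def masked_softmax(scores, mask_row):
    """Softmax over one row of scores; masked positions are set to -1e9 first."""
    masked = [s if keep else -1e9 for s, keep in zip(scores, mask_row)]
    peak = max(masked)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in masked]
    total = sum(exps)
    return [e / total for e in exps]

mask = causal_mask_rows(4)
for row in mask:
    print(row)  # lower-triangular rows: [1, 0, 0, 0], [1, 1, 0, 0], ...

# Row 1 (the second token) can only see positions 0 and 1,
# even though positions 2 and 3 have the highest raw scores.
weights = masked_softmax([0.5, 1.5, 3.0, 2.0], mask[1])
print([round(w, 3) for w in weights])  # -> [0.269, 0.731, 0.0, 0.0]
```

A mask with ones above the diagonal would do the opposite and let each position see only the future, which is why the orientation matters during greedy decoding.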

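# Read the sentence from the command line. In a notebook, sys.argv[1] is the
# kernel connection file (see the SOURCE line in the output), so call
# translate("...") directly there.

translate(sys.argv[1] if len(sys.argv) > 1 else "I am not a very good student.")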

Using device: cpu

SOURCE: --f=/Users/LSoica/Library/Jupyter/runtime/kernel-v3e64c2d59ad927737df05cc8dc8916632f1f67d53.json

PREDICTED: Buona Bianca Bianca à à vino

'Buona Bianca Bianca à à vino'
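The SOURCE line above shows that, inside a notebook, sys.argv[1] is the Jupyter kernel's connection-file argument rather than a sentence, so the model translated a file path. One way to make the call work both from the command line and from a notebook is to skip option-like arguments. Note that sentence_from_argv is a hypothetical helper, not part of the original code.

```python
import sys

def sentence_from_argv(argv, default):
    """Return the first positional argument, or `default` if there is none.

    Arguments that start with '-' (such as Jupyter's `--f=...json` or `-f`)
    and paths ending in '.json' are treated as kernel options and skipped.
    """
    for arg in argv[1:]:
        if arg.startswith("-") or arg.endswith(".json"):
            continue
        return arg
    return default

# A real command-line call keeps its sentence.
print(sentence_from_argv(["translate.py", "Hello there."], "fallback"))

# A notebook kernel invocation falls back to the default sentence.
print(sentence_from_argv(["ipykernel_launcher.py", "--f=/tmp/kernel.json"], "fallback"))

# In the notebook this value would be passed to translate(...).
sentence = sentence_from_argv(sys.argv, "I am not a very good student.")
```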

Visualize the attention heads¶

import pandas as pd

import altair as alt

config = get_config()

train_dataloader, val_dataloader, vocab_src, vocab_tgt = get_ds(config)

model = get_model(config, vocab_src.get_vocab_size(), vocab_tgt.get_vocab_size()).to(device)

# Load the pretrained weights

model_filename = get_weights_file_path(config, "00")

state = torch.load(model_filename)

model.load_state_dict(state['model_state_dict'])

def mtx2df(m, max_row, max_col, row_tokens, col_tokens):
    return pd.DataFrame(
        [
            (
                r,
                c,
                float(m[r, c]),
                "%.3d %s" % (r, row_tokens[r] if len(row_tokens) > r else "<blank>"),
                "%.3d %s" % (c, col_tokens[c] if len(col_tokens) > c else "<blank>"),
            )
            for r in range(m.shape[0])
            for c in range(m.shape[1])
            if r < max_row and c < max_col
        ],
        columns=["row", "column", "value", "row_token", "col_token"],
    )

def get_attn_map(attn_type: str, layer: int, head: int):
    if attn_type == "encoder":
        attn = model.encoder.layers[layer].self_attention_block.attention_scores
    elif attn_type == "decoder":
        attn = model.decoder.layers[layer].self_attention_block.attention_scores
    elif attn_type == "encoder-decoder":
        attn = model.decoder.layers[layer].cross_attention_block.attention_scores
    else:
        raise ValueError(f"Unknown attention type: {attn_type}")
    return attn[0, head].data

def attn_map(attn_type, layer, head, row_tokens, col_tokens, max_sentence_len):
    df = mtx2df(
        get_attn_map(attn_type, layer, head),
        max_sentence_len,
        max_sentence_len,
        row_tokens,
        col_tokens,
    )
    return (
        alt.Chart(data=df)
        .mark_rect()
        .encode(
            x=alt.X("col_token", axis=alt.Axis(title="")),
            y=alt.Y("row_token", axis=alt.Axis(title="")),
            color="value",
            tooltip=["row", "column", "value", "row_token", "col_token"],
        )
        #.title(f"Layer {layer} Head {head}")
        .properties(height=400, width=400, title=f"Layer {layer} Head {head}")
        .interactive()
    )

def get_all_attention_maps(attn_type: str, layers: list[int], heads: list[int], row_tokens: list, col_tokens, max_sentence_len: int):
    charts = []
    for layer in layers:
        rowCharts = []
        for head in heads:
            rowCharts.append(attn_map(attn_type, layer, head, row_tokens, col_tokens, max_sentence_len))
        charts.append(alt.hconcat(*rowCharts))
    return alt.vconcat(*charts)

def load_next_batch():
    # Load a sample batch from the validation set
    batch = next(iter(val_dataloader))
    encoder_input = batch["encoder_input"].to(device)
    encoder_mask = batch["encoder_mask"].to(device)
    decoder_input = batch["decoder_input"].to(device)
    decoder_mask = batch["decoder_mask"].to(device)

    encoder_input_tokens = [vocab_src.id_to_token(idx) for idx in encoder_input[0].cpu().numpy()]
    decoder_input_tokens = [vocab_tgt.id_to_token(idx) for idx in decoder_input[0].cpu().numpy()]

    # Check that the batch size is 1
    assert encoder_input.size(0) == 1, "Batch size must be 1 for validation"

    # Run the model once so that the attention blocks hold the scores for this
    # batch; the decoded output itself is not used here
    model_out = greedy_decode(
        model, encoder_input, encoder_mask, vocab_src, vocab_tgt, config['seq_len'], device)

    return batch, encoder_input_tokens, decoder_input_tokens

Max length of source sentence: 309

Max length of target sentence: 274

batch, encoder_input_tokens, decoder_input_tokens = load_next_batch()

print(f'Source: {batch["src_text"][0]}')

print(f'Target: {batch["tgt_text"][0]}')

sentence_len = encoder_input_tokens.index("[PAD]")

layers = [0, 1, 2]

heads = [0, 1, 2, 3, 4, 5, 6, 7]

# Encoder Self-Attention

get_all_attention_maps("encoder", layers, heads, encoder_input_tokens, encoder_input_tokens, min(20, sentence_len))

Source: Were I not morally certain that your uncle will be dead ere you reach Madeira, I would advise you to accompany Mr. Mason back; but as it is, I think you had better remain in England till you can hear further, either from or of Mr. Eyre.

Target: "Se non fossi sicuro che vostro zio non morisse prima che voi aveste il tempo di giungere a Madera, vi consiglierei di partire col signor Mason; ma, nello stato presente delle cose, credo che fareste meglio di rimanere in Inghilterra finché non abbiate notizie del signor Eyre.
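Each heatmap cell is the weight with which the row token attends to the column token. As a rough illustration, the weights for one head can be computed from toy query and key vectors as below. This is plain Python rather than the notebook's tensors, the vectors are made-up numbers, and it assumes that attention_scores stores the post-softmax weights, which this section does not show.

```python
import math

def attention_weights(queries, keys):
    """Scaled dot-product attention weights: softmax(Q K^T / sqrt(d_k)) per row."""
    d_k = len(keys[0])
    weights = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        peak = max(scores)  # subtract the maximum for numerical stability
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights

# Toy 2-dimensional vectors for three tokens (made-up numbers).
tokens = ["[SOS]", "ciao", "[EOS]"]
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attn = attention_weights(vectors, vectors)
for token, row in zip(tokens, attn):
    print(f"{token:>6}", [round(w, 2) for w in row])
```

Every row sums to 1, so a bright cell in a heatmap row necessarily comes at the expense of the other cells in that row.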

Improvements¶

- When CUDA is available, increase the batch size to, say, 32. I used a batch size of 8 because that is all the memory I have with MPS, and such a small batch makes the gradient estimates noisy. Although the examples in a batch are processed independently during the forward and backward passes, their gradients are aggregated over the whole batch and the optimizer takes a single step per batch, so a larger batch gives a smoother update.

- Increase the embedding vector size (d_model, currently 512). This leaves room for more features to be extracted.

- Run more epochs. I have only trained the model for 8 epochs.
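The effect of batch size on gradient noise, mentioned in the first bullet, can be illustrated with a toy simulation. This is only a sketch under stated assumptions: per-example gradients are modelled as a true gradient of 1.0 plus Gaussian noise, and batch_gradient is a hypothetical stand-in for a real backward pass.

```python
import random
import statistics

def batch_gradient(batch_size, rng, true_grad=1.0, noise_std=2.0):
    """Average of noisy per-example gradients, as a mean-reduced loss would give."""
    grads = [true_grad + rng.gauss(0.0, noise_std) for _ in range(batch_size)]
    return sum(grads) / batch_size

def gradient_spread(batch_size, steps=2000, seed=0):
    """Standard deviation of the batch gradient across many simulated steps."""
    rng = random.Random(seed)
    samples = [batch_gradient(batch_size, rng) for _ in range(steps)]
    return statistics.stdev(samples)

for batch_size in (8, 32):
    print(f"batch_size={batch_size:>2}  gradient std ~ {gradient_spread(batch_size):.3f}")
```

Going from 8 to 32 examples should roughly halve the spread, since the standard deviation of a mean falls with the square root of the batch size. If memory is the limit, as with MPS here, accumulating gradients over four batches of 8 before each optimizer step is a common alternative. That is a suggestion, not something the notebook does.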

References¶

Coding a Transformer from scratch on PyTorch, with full explanation, training and inference.