Fine-tuning an LLM on your own docs

Natural Language Processing

Text classification: the model is trained to predict a label for a given text. Text classification is frequently used for tasks like sentiment analysis, topic classification, and spam detection.

Token classification: the model is trained to predict a label for each token in the sequence. Token classification is frequently used for tasks like named entity recognition (NER), part-of-speech tagging, and chunking.

Question answering: the model is trained to predict an answer to a question based on a given context. Question answering is frequently used for tasks like reading comprehension, fact verification, and conversational assistants.

Causal language modeling: the model is trained to predict the next token in the sequence, attending only to the tokens on its left. Causal language models are frequently used for text generation; GPT-2 is an example of a causal language model.

Masked language modeling: the model is trained to predict the masked tokens in the sequence. A masked language model can attend to tokens bidirectionally, which means it has full access to the tokens on both its left and its right. Masked language modeling is well suited to tasks that require a good contextual understanding of an entire sequence. BERT is an example of a masked language model.

Translation: the model is trained to translate text from one language to another. It is typically framed as a sequence-to-sequence task, in which an encoder reads the source text and a decoder generates the text in the target language.

Summarization: the model is trained to summarize a given text. Summarization is frequently used for tasks like news summarization, article summarization, and book summarization.

Multiple choice: the model is trained to predict the correct answer from a list of multiple choice options.
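The practical difference between causal and masked language modeling is which positions each token may attend to, and what the model is trained to predict. The plain-Python sketch below (toy token IDs, no library calls; the helper names are illustrative) builds both attention patterns and the (input, next token) pairs that causal LM training uses.

```python
def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (only j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def bidirectional_mask(n):
    """Masked language models such as BERT let every position attend to every other one."""
    return [[True] * n for _ in range(n)]


def next_token_pairs(token_ids):
    """Causal LM training pairs: the token at step t should be followed by the token at t + 1."""
    return list(zip(token_ids[:-1], token_ids[1:]))


for row in causal_mask(4):
    print("".join("x" if allowed else "." for allowed in row))
# x...
# xx..
# xxx.
# xxxx

print(next_token_pairs([15, 42, 7, 99]))  # [(15, 42), (42, 7), (7, 99)]
```

The lower-triangular pattern is what lets a causal model generate text left to right: predictions at position i never depend on tokens that come after it.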

The goal of this post is to fine-tune an LLM on a custom dataset for causal language modeling. We will use the DistilGPT2 model. DistilGPT2 (short for Distilled-GPT2) is an English-language model pre-trained with the supervision of the smallest version of Generative Pre-trained Transformer 2 (GPT-2). Like GPT-2, DistilGPT2 can be used to generate text. Because it is distilled from GPT-2, the design, training data, and limitations of GPT-2 largely apply to DistilGPT2 as well.

Hugging Face Datasets

Datasets is a library for easily accessing and sharing datasets for Audio, Computer Vision, and Natural Language Processing (NLP) tasks. Because it is backed by the Apache Arrow format, it can process large datasets with zero-copy reads: data is memory-mapped from disk instead of being loaded entirely into RAM, so even datasets larger than memory can be processed quickly and efficiently.

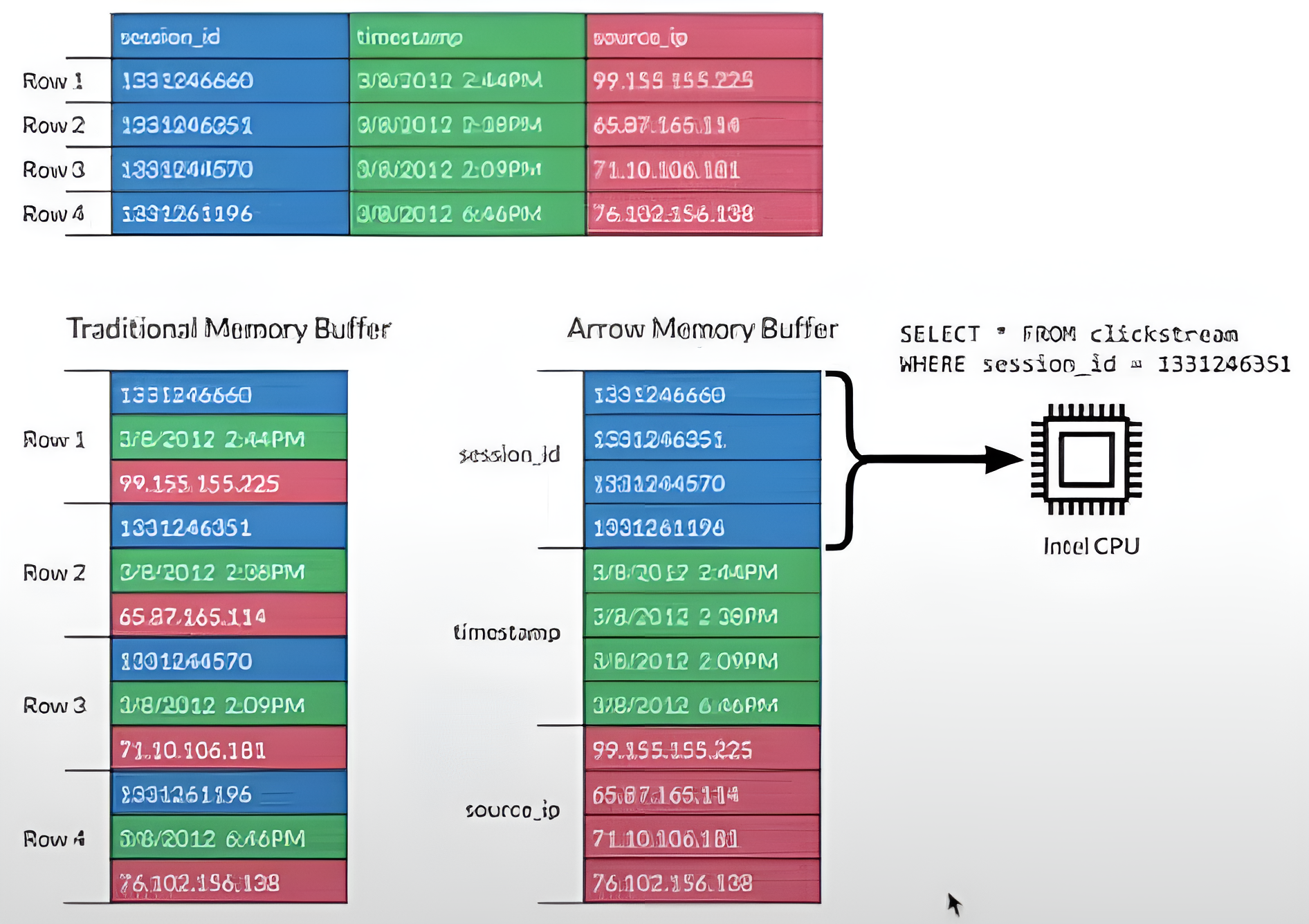

Apache Arrow is a universal columnar format and multi-language toolbox for fast data interchange and in-memory analytics. What is a columnar format?

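A columnar format stores data in columns, which are arrays of values. Each column contains data of the same type. Columnar formats are efficient for processing large datasets because a query that touches only a few columns reads only those arrays, and contiguous values of a single type compress well and can be processed with vectorized (SIMD) instructions.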

Traditional relational databases (RDBMSs) typically store data row-wise: the fields of each row are stored contiguously in memory. Columnar formats store data column-wise: the values of each column are stored contiguously. Row stores suit OLTP (Online Transaction Processing) workloads, such as banking and retail transactions, that read and write whole records; columnar formats suit OLAP (Online Analytical Processing) workloads, such as analytics and data warehousing (for example with Hadoop or Spark), that scan a few columns across many rows.
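To make the row/column distinction concrete, here is a toy sketch in plain Python with made-up records. Python lists are not contiguous typed memory, so this illustrates only the two layouts, not Arrow's actual performance characteristics.

```python
# Three illustrative records (hypothetical data).
rows = [
    {"id": 1, "category": "Technology", "score": 10},
    {"id": 2, "category": "Biology", "score": 4},
    {"id": 3, "category": "Technology", "score": 7},
]

# Row-wise (RDBMS-style): the fields of each record sit next to each other.
# Reading or updating one whole record touches a single place (OLTP).
row_store = [(r["id"], r["category"], r["score"]) for r in rows]

# Column-wise (Arrow/Parquet-style): each column is one array of a single type.
# An aggregate over one column scans just that array (OLAP).
column_store = {
    "id": [r["id"] for r in rows],
    "category": [r["category"] for r in rows],
    "score": [r["score"] for r in rows],
}

# Same query on both layouts: the row store walks every record,
# while the column store reads only the "score" array.
total_from_rows = sum(record[2] for record in row_store)
total_from_columns = sum(column_store["score"])
print(total_from_rows, total_from_columns)  # 21 21
```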

Apache Parquet is a columnar storage format that is widely used in the Hadoop ecosystem. What, then, is the difference between Parquet and Arrow? Arrow is an in-memory format (plus a multi-language toolbox built around it), while Parquet is an on-disk file format. Parquet data is encoded and compressed for storage, so it cannot be operated on directly; it must first be decoded, chunk by chunk, into an in-memory representation such as Arrow.

Apache Arrow contains a set of technologies that enable data systems to efficiently store, process, and move data:

- Arrow IPC/Serialization: a binary format for efficiently exchanging Arrow data between processes and persisting it, without converting it to another representation.

- Arrow Flight and Flight SQL: a high-volume transport protocol for Arrow data, and a protocol for running SQL queries against databases over Flight, for scalable in-memory analytics.

- Arrow Memory Pool: an allocator abstraction that manages and tracks the memory backing Arrow buffers.

PEFT

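PEFT (Parameter-Efficient Fine-Tuning) is a Hugging Face library for fine-tuning LLMs by training only a small number of extra parameters. It implements methods such as LoRA (Low-Rank Adaptation), which freezes the pretrained weights and learns a low-rank update for selected layers.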
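A minimal plain-Python sketch of the LoRA idea, with toy sizes and random numbers (it is an illustration, not PEFT's implementation): a frozen weight W is augmented with trainable factors B and A, and the layer output becomes W x + (alpha / r) * B A x. Because B A has the same shape as W, the update can also be merged into a single matrix after training, which gives the same outputs with no extra inference cost.

```python
import random


def matmul(a, b):
    """Multiply matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def matvec(m, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in m]


rng = random.Random(0)
d_in, d_out, r, lora_alpha = 16, 16, 2, 4  # toy sizes; r is much smaller than d_in and d_out
scale = lora_alpha / r                     # LoRA scales the update by alpha / r

W = [[rng.uniform(-1, 1) for _ in range(d_in)] for _ in range(d_out)]  # frozen, d_out x d_in
A = [[rng.uniform(-1, 1) for _ in range(d_in)] for _ in range(r)]      # trainable, r x d_in
# LoRA starts B at zero so training begins exactly at the pretrained model;
# random values are used here only so that the update is non-zero.
B = [[rng.uniform(-1, 1) for _ in range(r)] for _ in range(d_out)]     # trainable, d_out x r
x = [rng.uniform(-1, 1) for _ in range(d_in)]

# Adapter kept separate: h = W x + scale * B (A x)
h_adapter = [w + scale * u for w, u in zip(matvec(W, x), matvec(B, matvec(A, x)))]

# Adapter merged into the weight: W' = W + scale * (B A), then h = W' x
BA = matmul(B, A)
W_merged = [[W[i][j] + scale * BA[i][j] for j in range(d_in)] for i in range(d_out)]
h_merged = matvec(W_merged, x)

max_diff = max(abs(p - q) for p, q in zip(h_adapter, h_merged))
print(f"max difference: {max_diff:.1e}")  # floating-point noise only
print(d_out * d_in, r * (d_in + d_out))   # 256 64: full matrix vs. A and B
```

Only A and B receive gradients, so the number of trained values scales with r * (d_in + d_out) rather than d_in * d_out.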

Install libraries

%pip install -U transformers datasets peft accelerate torch tqdm tiktoken markdown

Load the data

First, we load a 5,000-example slice of the ELI5 dataset from the Hugging Face Hub and split it into training and test sets. We then train the model on the training set, evaluate it on the test set, and run inference on a custom prompt.

After that, we fine-tune the model on our own dataset.

from datasets import load_dataset

eli5 = load_dataset("eli5_category", split="train[:5000]")

eli5 = eli5.train_test_split(test_size=0.2)

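Conceptually, `train_test_split(test_size=0.2)` shuffles the 5,000 rows and holds out 20% of them, which is why the later `map` calls process 4,000 training and 1,000 test examples. A plain-Python sketch of the idea (the `split_indices` helper is hypothetical and is not how the datasets library implements the split):

```python
import random


def split_indices(n_rows, test_size=0.2, seed=0):
    """Shuffle row indices and hold out a `test_size` fraction as the test set."""
    indices = list(range(n_rows))
    random.Random(seed).shuffle(indices)
    n_test = round(n_rows * test_size)
    return indices[n_test:], indices[:n_test]


train_idx, test_idx = split_indices(5000, test_size=0.2)
print(len(train_idx), len(test_idx))  # 4000 1000
```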

Preprocess

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("distilbert/distilgpt2")

eli5 = eli5.flatten()

eli5["train"][0]

{'q_id': '7h2ns1',
 'title': 'How does different data traveling on the same cable not get lost with all the other data?',
 'selftext': '',
 'category': 'Technology',
 'subreddit': 'explainlikeimfive',
 'answers.a_id': ['dqnmb02'],
 'answers.text': ['Data is encapsulated into packets with headers and trailers that identify it. Sometimes it does get lost though. When 2 devices establish a connection, they decide on packet numbering. If I send you a packet that says it contains data 1500 - 1600 you expect that my next packet starts with 1700. If it doesn’t, then you your response to me is essentially “I need 1700”.'],
 'answers.score': [10],
 'answers.text_urls': [[]],
 'title_urls': ['url'],
 'selftext_urls': ['url']}
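`eli5.flatten()` turns the nested `answers` struct into top-level columns, which is why the record above has keys such as `answers.text`. The plain-Python sketch below mimics that dotted naming on a single dict; `flatten_record` is a hypothetical helper, and `Dataset.flatten` itself operates on Arrow struct columns, so this is only an illustration. The sample record is trimmed from the example above.

```python
def flatten_record(record, parent_key=""):
    """Turn nested dicts into dotted top-level keys, e.g. {"answers": {"text": ...}} -> {"answers.text": ...}."""
    flat = {}
    for key, value in record.items():
        name = f"{parent_key}.{key}" if parent_key else key
        if isinstance(value, dict):
            flat.update(flatten_record(value, name))  # recurse into nested structs
        else:
            flat[name] = value
    return flat


nested = {"q_id": "7h2ns1", "answers": {"a_id": ["dqnmb02"], "score": [10]}}
print(flatten_record(nested))
# {'q_id': '7h2ns1', 'answers.a_id': ['dqnmb02'], 'answers.score': [10]}
```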

def preprocess_function(examples):
    # Each example holds a list of answers; join them into one string before tokenizing.
    return tokenizer([" ".join(x) for x in examples["answers.text"]])

tokenized_eli5 = eli5.map(
    preprocess_function,
    batched=True,
    num_proc=4,
    remove_columns=eli5["train"].column_names,
)

block_size = 128

def group_texts(examples):
    # Concatenate all texts.
    concatenated_examples = {k: sum(examples[k], []) for k in examples.keys()}
    total_length = len(concatenated_examples[list(examples.keys())[0]])
    # We drop the small remainder; we could add padding instead if the model supported it.
    # You can customize this part to your needs.
    if total_length >= block_size:
        total_length = (total_length // block_size) * block_size
    # Split by chunks of block_size.
    result = {
        k: [t[i : i + block_size] for i in range(0, total_length, block_size)]
        for k, t in concatenated_examples.items()
    }
    # Causal LM labels are the inputs themselves; the model shifts them by one position internally.
    result["labels"] = result["input_ids"].copy()
    return result

lm_dataset = tokenized_eli5.map(group_texts, batched=True, num_proc=4)

from transformers import DataCollatorForLanguageModeling

# GPT-2 has no padding token, so reuse the end-of-sequence token for padding.
tokenizer.pad_token = tokenizer.eos_token
# mlm=False selects causal (next-token) language modeling instead of masked language modeling.
data_collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)

Running these cells prints a few warnings such as "Token indices sequence length is longer than the specified maximum sequence length for this model (1209 > 1024)". They are harmless here: group_texts cuts the concatenated token stream into 128-token blocks, so no sequence longer than DistilGPT2's 1,024-token context ever reaches the model.
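To see what `group_texts` does, the sketch below runs the same logic on made-up token IDs with `block_size=4`. It also mimics how `DataCollatorForLanguageModeling(mlm=False)` builds labels: it copies `input_ids` (rebuilding labels rather than reusing the `labels` column) and sets padding positions to -100, the index the cross-entropy loss ignores. The model then shifts labels by one position internally, which is why copying `input_ids` is correct for next-token prediction. Both helper names are hypothetical. One side effect of `tokenizer.pad_token = tokenizer.eos_token` is that genuine `<|endoftext|>` tokens would be masked as well; it matters little here because the GPT-2 tokenizer does not append EOS and almost every block is already full length, so no padding is needed.

```python
def group_texts_sketch(examples, block_size=128):
    """Same logic as group_texts above, with block_size as a parameter for toy inputs."""
    concatenated = {k: sum(examples[k], []) for k in examples.keys()}
    total_length = len(concatenated[list(examples.keys())[0]])
    if total_length >= block_size:
        total_length = (total_length // block_size) * block_size  # drop the remainder
    result = {
        k: [t[i : i + block_size] for i in range(0, total_length, block_size)]
        for k, t in concatenated.items()
    }
    result["labels"] = result["input_ids"].copy()
    return result


def collator_labels(input_ids, pad_token_id):
    """Causal-LM labels: a copy of input_ids with padding positions replaced by -100."""
    return [[-100 if tok == pad_token_id else tok for tok in row] for row in input_ids]


# Three tokenized "answers" of different lengths (made-up token IDs).
toy = {"input_ids": [[1, 2, 3], [4, 5], [6, 7, 8, 9]],
       "attention_mask": [[1, 1, 1], [1, 1], [1, 1, 1, 1]]}
grouped = group_texts_sketch(toy, block_size=4)
print(grouped["input_ids"])  # [[1, 2, 3, 4], [5, 6, 7, 8]]  (token 9 is dropped)

EOS = 50256  # GPT-2's <|endoftext|> id, reused as the pad token above
print(collator_labels([[11, 12, EOS, EOS]], pad_token_id=EOS))  # [[11, 12, -100, -100]]
```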

Train

from transformers import AutoModelForCausalLM, TrainingArguments, Trainer

model = AutoModelForCausalLM.from_pretrained("distilbert/distilgpt2")

training_args = TrainingArguments(
    output_dir="my_awesome_eli5_clm-model",
    eval_strategy="epoch",
    learning_rate=2e-5,
    weight_decay=0.01,
    push_to_hub=True,  # requires being logged in to the Hugging Face Hub
)

trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=lm_dataset["train"],
    eval_dataset=lm_dataset["test"],
    data_collator=data_collator,
    processing_class=tokenizer,  # replaces the deprecated `tokenizer=` argument
)

trainer.train()

13%|█▎ | 500/3975 [04:26<30:20, 1.91it/s]

{'loss': 3.9787, 'grad_norm': 4.818574905395508, 'learning_rate': 1.748427672955975e-05, 'epoch': 0.38}

25%|██▌ | 1000/3975 [08:52<25:56, 1.91it/s]

{'loss': 3.9528, 'grad_norm': 3.8537325859069824, 'learning_rate': 1.4968553459119497e-05, 'epoch': 0.75}

33%|███▎ | 1325/3975 [12:26<23:20, 1.89it/s]

{'eval_loss': 3.8328182697296143, 'eval_runtime': 41.027, 'eval_samples_per_second': 60.497, 'eval_steps_per_second': 7.58, 'epoch': 1.0}

38%|███▊ | 1500/3975 [14:00<22:34, 1.83it/s]

{'loss': 3.913, 'grad_norm': 3.8344168663024902, 'learning_rate': 1.2452830188679246e-05, 'epoch': 1.13}

50%|█████ | 2000/3975 [18:25<16:49, 1.96it/s]

{'loss': 3.8573, 'grad_norm': 4.064958572387695, 'learning_rate': 9.937106918238994e-06, 'epoch': 1.51}

63%|██████▎ | 2500/3975 [22:48<13:05, 1.88it/s]

{'loss': 3.8496, 'grad_norm': 4.159275531768799, 'learning_rate': 7.421383647798742e-06, 'epoch': 1.89}

67%|██████▋ | 2650/3975 [24:51<11:36, 1.90it/s]

{'eval_loss': 3.823622941970825, 'eval_runtime': 41.3614, 'eval_samples_per_second': 60.008, 'eval_steps_per_second': 7.519, 'epoch': 2.0}

75%|███████▌ | 3000/3975 [27:54<08:24, 1.93it/s]

{'loss': 3.83, 'grad_norm': 3.96323823928833, 'learning_rate': 4.905660377358491e-06, 'epoch': 2.26}

88%|████████▊ | 3500/3975 [32:22<04:15, 1.86it/s]

{'loss': 3.8126, 'grad_norm': 4.447389125823975, 'learning_rate': 2.389937106918239e-06, 'epoch': 2.64}

100%|██████████| 3975/3975 [37:30<00:00, 1.77it/s]

{'eval_loss': 3.8222262859344482, 'eval_runtime': 52.5303, 'eval_samples_per_second': 47.249, 'eval_steps_per_second': 5.92, 'epoch': 3.0}

{'train_runtime': 2250.3542, 'train_samples_per_second': 14.131, 'train_steps_per_second': 1.766, 'train_loss': 3.8763028203616354, 'epoch': 3.0}

TrainOutput(global_step=3975, training_loss=3.8763028203616354, metrics={'train_runtime': 2250.3542, 'train_samples_per_second': 14.131, 'train_steps_per_second': 1.766, 'total_flos': 1038654583603200.0, 'train_loss': 3.8763028203616354, 'epoch': 3.0})
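The `learning_rate` values in the log follow the Trainer's default schedule (`lr_scheduler_type="linear"` with no warmup): linear decay from the configured learning rate to 0 over all training steps. A plain-Python re-implementation of that formula (the function name is illustrative) reproduces the logged numbers.

```python
def linear_schedule_lr(step, base_lr, total_steps, warmup_steps=0):
    """Learning rate after `step` optimizer steps: linear warmup, then linear decay to 0."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    return base_lr * max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


# ELI5 run: learning_rate=2e-5, 3,975 total steps, no warmup.
for step in (500, 1000, 1500):
    print(step, linear_schedule_lr(step, base_lr=2e-5, total_steps=3975))
```

The printed values match the logged `learning_rate` at steps 500, 1,000, and 1,500 up to floating-point rounding; the same formula also reproduces the later runs (25,233 and 6,309 total steps).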

Evaluate

import math

eval_results = trainer.evaluate()

print(f"Perplexity: {math.exp(eval_results['eval_loss']):.2f}")

100%|██████████| 311/311 [00:40<00:00, 7.62it/s]

Perplexity: 45.71
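Perplexity is the exponential of the mean per-token cross-entropy (in nats, as the Trainer reports it), so it can be recomputed directly from the `eval_loss` values in the logs. Roughly, a perplexity of about 46 means the model is as uncertain as if it were choosing uniformly among about 46 tokens at each step. The own-docs run later in this post is evaluated on a different test set with different preprocessing, so the two perplexities are not directly comparable.

```python
import math


def perplexity(mean_cross_entropy_nats):
    """Perplexity = exp(mean per-token cross-entropy in nats)."""
    return math.exp(mean_cross_entropy_nats)


eli5_eval_loss = 3.8222262859344482      # final eval_loss of the ELI5 run
own_docs_eval_loss = 0.4647159278392792  # final eval_loss of the own-docs run
print(f"{perplexity(eli5_eval_loss):.2f}")      # 45.71
print(f"{perplexity(own_docs_eval_loss):.2f}")  # 1.59
```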

Inference

prompt = "Somatic hypermutation allows the immune system to"

from transformers import pipeline

generator = pipeline("text-generation", model="my_awesome_eli5_clm-model")

generator(prompt)

Hardware accelerator e.g. GPU is available in the environment, but no `device` argument is passed to the `Pipeline` object. Model will be on CPU.

[{'generated_text': 'Somatic hypermutation allows the immune system to adapt to a more drastic response. When the immune system is overwhelmed, the immune system does NOT adapt to new stimuli (an event) and does not respond to new stimuli.This technique is called hyp'}]

Build our own dataset

from datasets import load_dataset

own_dataset = load_dataset("text", data_files={"train": ["llm-fine-tuning-docs/docs/README.md"]}, split="train")

own_dataset = own_dataset.train_test_split(test_size=0.2)

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("distilbert/distilgpt2")

def preprocess_function(examples):
    # The "text" loader yields one plain string per line, so tokenize the strings directly.
    # Note: the run logged below used `[" ".join(x) for x in examples["text"]]`, copied from the
    # ELI5 version. Joining a string iterates over its characters ("time" -> "t i m e"), which
    # likely explains the spaced-out generations and the unusually low loss reported later.
    return tokenizer(examples["text"])

tokenized_own_dataset = own_dataset.map(
    preprocess_function,
    batched=True,
    num_proc=4,
    remove_columns=own_dataset["train"].column_names,
)

block_size = 128

def group_texts(examples):
    # Concatenate all texts.
    concatenated_examples = {k: sum(examples[k], []) for k in examples.keys()}
    total_length = len(concatenated_examples[list(examples.keys())[0]])
    # Drop the remainder that does not fill a whole block.
    if total_length >= block_size:
        total_length = (total_length // block_size) * block_size
    # Split by chunks of block_size.
    result = {
        k: [t[i : i + block_size] for i in range(0, total_length, block_size)]
        for k, t in concatenated_examples.items()
    }
    result["labels"] = result["input_ids"].copy()
    return result

lm_dataset = tokenized_own_dataset.map(group_texts, batched=True, num_proc=4)

As with ELI5, the tokenizer prints a few warnings such as "Token indices sequence length is longer than the specified maximum sequence length for this model (1498 > 1024)" while mapping the 110,255 training and 27,564 test lines. They are harmless, because group_texts splits everything into 128-token blocks before training.
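The ELI5 and own-docs versions of `preprocess_function` look alike but receive different data, which explains the spaced-out letters in the own-docs generations later in this post (for example "t i m e |"). The plain-Python sketch below shows the difference; `preprocess_texts` is a hypothetical helper, not part of any library.

```python
# ELI5: "answers.text" holds a *list* of answer strings per example,
# so " ".join(...) concatenates the answers.
eli5_style = [["First answer.", "Second answer."]]
print([" ".join(x) for x in eli5_style])  # ['First answer. Second answer.']

# The "text" loader yields one plain *string* per line. Iterating over a
# string yields characters, so the same expression spaces out every character.
text_style = ["time"]
print([" ".join(x) for x in text_style])  # ['t i m e']


def preprocess_texts(texts):
    """Join lists of strings, but pass plain strings through unchanged."""
    return [x if isinstance(x, str) else " ".join(x) for x in texts]


print(preprocess_texts(text_style + eli5_style))  # ['time', 'First answer. Second answer.']
```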

from transformers import DataCollatorForLanguageModeling

tokenizer.pad_token = tokenizer.eos_token

data_collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)

from transformers import AutoModelForCausalLM, TrainingArguments, Trainer

model = AutoModelForCausalLM.from_pretrained("distilbert/distilgpt2")

training_args = TrainingArguments(
    output_dir="my_awesome_eli5_clm-model",  # same directory (and Hub repo) as the ELI5 run, so it is overwritten
    eval_strategy="epoch",
    learning_rate=2e-5,
    weight_decay=0.01,
    push_to_hub=True,
)

trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=lm_dataset["train"],
    eval_dataset=lm_dataset["test"],
    data_collator=data_collator,
    processing_class=tokenizer,  # replaces the deprecated `tokenizer=` argument
)

trainer.train()

2%|▏ | 500/25233 [04:33<3:39:04, 1.88it/s]

{'loss': 1.0723, 'grad_norm': 3.0989766120910645, 'learning_rate': 1.9603693575872867e-05, 'epoch': 0.06}

4%|▍ | 1000/25233 [09:04<3:37:09, 1.86it/s]

{'loss': 0.8407, 'grad_norm': 2.9212241172790527, 'learning_rate': 1.9207387151745732e-05, 'epoch': 0.12}

6%|▌ | 1500/25233 [13:36<3:35:21, 1.84it/s]

{'loss': 0.784, 'grad_norm': 2.680738687515259, 'learning_rate': 1.8811080727618597e-05, 'epoch': 0.18}

8%|▊ | 2000/25233 [18:16<3:30:21, 1.84it/s]

{'loss': 0.7412, 'grad_norm': 3.1172192096710205, 'learning_rate': 1.8414774303491462e-05, 'epoch': 0.24}

10%|▉ | 2500/25233 [22:51<3:35:08, 1.76it/s]

{'loss': 0.7058, 'grad_norm': 2.4785091876983643, 'learning_rate': 1.8018467879364324e-05, 'epoch': 0.3}

12%|█▏ | 3000/25233 [27:25<3:16:53, 1.88it/s]

{'loss': 0.6976, 'grad_norm': 3.3812947273254395, 'learning_rate': 1.762216145523719e-05, 'epoch': 0.36}

14%|█▍ | 3500/25233 [31:58<3:25:01, 1.77it/s]

{'loss': 0.6665, 'grad_norm': 2.444471836090088, 'learning_rate': 1.7225855031110054e-05, 'epoch': 0.42}

16%|█▌ | 4000/25233 [36:31<3:10:33, 1.86it/s]

{'loss': 0.6396, 'grad_norm': 4.310383319854736, 'learning_rate': 1.682954860698292e-05, 'epoch': 0.48}

18%|█▊ | 4500/25233 [41:04<3:08:57, 1.83it/s]

{'loss': 0.6398, 'grad_norm': 3.099477767944336, 'learning_rate': 1.6433242182855787e-05, 'epoch': 0.54}

20%|█▉ | 5000/25233 [45:37<3:00:22, 1.87it/s]

{'loss': 0.627, 'grad_norm': 3.2062737941741943, 'learning_rate': 1.6036935758728653e-05, 'epoch': 0.59}

22%|██▏ | 5500/25233 [50:10<2:58:05, 1.85it/s]

{'loss': 0.6303, 'grad_norm': 2.4685802459716797, 'learning_rate': 1.5640629334601514e-05, 'epoch': 0.65}

24%|██▍ | 6000/25233 [54:41<2:46:10, 1.93it/s]

{'loss': 0.6037, 'grad_norm': 2.2792470455169678, 'learning_rate': 1.5244322910474381e-05, 'epoch': 0.71}

26%|██▌ | 6500/25233 [59:12<2:47:27, 1.86it/s]

{'loss': 0.6115, 'grad_norm': 2.518824338912964, 'learning_rate': 1.4848016486347246e-05, 'epoch': 0.77}

28%|██▊ | 7000/25233 [1:03:45<2:43:01, 1.86it/s]

{'loss': 0.5934, 'grad_norm': 3.007490396499634, 'learning_rate': 1.445171006222011e-05, 'epoch': 0.83}

30%|██▉ | 7500/25233 [1:08:19<2:39:42, 1.85it/s]

{'loss': 0.5867, 'grad_norm': 2.0084362030029297, 'learning_rate': 1.4055403638092975e-05, 'epoch': 0.89}

32%|███▏ | 8000/25233 [1:12:52<2:35:04, 1.85it/s]

{'loss': 0.5804, 'grad_norm': 2.803126096725464, 'learning_rate': 1.365909721396584e-05, 'epoch': 0.95}

33%|███▎ | 8411/25233 [1:21:18<2:29:29, 1.88it/s]

{'eval_loss': 0.5183552503585815, 'eval_runtime': 281.5923, 'eval_samples_per_second': 59.405, 'eval_steps_per_second': 7.426, 'epoch': 1.0}

34%|███▎ | 8500/25233 [1:22:06<2:30:28, 1.85it/s]

{'loss': 0.5757, 'grad_norm': 2.8604393005371094, 'learning_rate': 1.3262790789838705e-05, 'epoch': 1.01}

36%|███▌ | 9000/25233 [1:26:40<2:26:46, 1.84it/s]

{'loss': 0.5649, 'grad_norm': 2.918274402618408, 'learning_rate': 1.286648436571157e-05, 'epoch': 1.07}

38%|███▊ | 9500/25233 [1:31:13<2:20:54, 1.86it/s]

{'loss': 0.5528, 'grad_norm': 2.2247049808502197, 'learning_rate': 1.2470177941584433e-05, 'epoch': 1.13}

40%|███▉ | 10000/25233 [1:35:47<2:14:26, 1.89it/s]

{'loss': 0.5624, 'grad_norm': 2.8656771183013916, 'learning_rate': 1.2073871517457298e-05, 'epoch': 1.19}

42%|████▏ | 10500/25233 [1:40:13<2:09:19, 1.90it/s]

{'loss': 0.5577, 'grad_norm': 2.363588571548462, 'learning_rate': 1.1677565093330163e-05, 'epoch': 1.25}

44%|████▎ | 11000/25233 [1:44:54<2:51:31, 1.38it/s]

{'loss': 0.5538, 'grad_norm': 2.6085078716278076, 'learning_rate': 1.1281258669203028e-05, 'epoch': 1.31}

46%|████▌ | 11500/25233 [1:49:44<2:05:29, 1.82it/s]

{'loss': 0.5386, 'grad_norm': 1.7755399942398071, 'learning_rate': 1.0884952245075892e-05, 'epoch': 1.37}

48%|████▊ | 12000/25233 [1:54:17<1:55:37, 1.91it/s]

{'loss': 0.5471, 'grad_norm': 2.295924425125122, 'learning_rate': 1.0488645820948757e-05, 'epoch': 1.43}

50%|████▉ | 12500/25233 [1:58:49<1:54:52, 1.85it/s]

{'loss': 0.5364, 'grad_norm': 2.4912588596343994, 'learning_rate': 1.0092339396821624e-05, 'epoch': 1.49}

52%|█████▏ | 13000/25233 [2:03:20<1:50:13, 1.85it/s]

{'loss': 0.553, 'grad_norm': 2.9126155376434326, 'learning_rate': 9.696032972694487e-06, 'epoch': 1.55}

54%|█████▎ | 13500/25233 [2:07:54<1:45:07, 1.86it/s]

{'loss': 0.5356, 'grad_norm': 2.7289233207702637, 'learning_rate': 9.299726548567352e-06, 'epoch': 1.61}

55%|█████▌ | 14000/25233 [2:12:29<1:42:05, 1.83it/s]

{'loss': 0.5371, 'grad_norm': 2.1596028804779053, 'learning_rate': 8.903420124440217e-06, 'epoch': 1.66}

57%|█████▋ | 14500/25233 [2:17:01<1:34:18, 1.90it/s]

{'loss': 0.5245, 'grad_norm': 1.9386277198791504, 'learning_rate': 8.507113700313082e-06, 'epoch': 1.72}

59%|█████▉ | 15000/25233 [2:21:36<1:33:37, 1.82it/s]

{'loss': 0.5429, 'grad_norm': 2.0193569660186768, 'learning_rate': 8.110807276185947e-06, 'epoch': 1.78}

61%|██████▏ | 15500/25233 [2:26:18<1:29:11, 1.82it/s]

{'loss': 0.5278, 'grad_norm': 3.1613399982452393, 'learning_rate': 7.714500852058813e-06, 'epoch': 1.84}

63%|██████▎ | 16000/25233 [2:30:57<1:25:23, 1.80it/s]

{'loss': 0.5413, 'grad_norm': 2.0913147926330566, 'learning_rate': 7.318194427931678e-06, 'epoch': 1.9}

65%|██████▌ | 16500/25233 [2:35:30<1:18:04, 1.86it/s]

{'loss': 0.5308, 'grad_norm': 2.6738293170928955, 'learning_rate': 6.921888003804542e-06, 'epoch': 1.96}

67%|██████▋ | 16822/25233 [2:43:01<1:09:28, 2.02it/s]

{'eval_loss': 0.47672775387763977, 'eval_runtime': 275.9801, 'eval_samples_per_second': 60.613, 'eval_steps_per_second': 7.577, 'epoch': 2.0}

67%|██████▋ | 17000/25233 [2:44:43<1:33:29, 1.47it/s]

{'loss': 0.5241, 'grad_norm': 2.466259479522705, 'learning_rate': 6.525581579677407e-06, 'epoch': 2.02}

69%|██████▉ | 17500/25233 [2:49:27<1:08:50, 1.87it/s]

{'loss': 0.52, 'grad_norm': 2.0835952758789062, 'learning_rate': 6.129275155550271e-06, 'epoch': 2.08}

71%|███████▏ | 18000/25233 [2:53:57<1:06:55, 1.80it/s]

{'loss': 0.5215, 'grad_norm': 2.868283987045288, 'learning_rate': 5.732968731423136e-06, 'epoch': 2.14}

73%|███████▎ | 18500/25233 [2:58:29<1:00:12, 1.86it/s]

{'loss': 0.5221, 'grad_norm': 2.237812042236328, 'learning_rate': 5.336662307296002e-06, 'epoch': 2.2}

75%|███████▌ | 19000/25233 [3:03:00<56:46, 1.83it/s]

{'loss': 0.5159, 'grad_norm': 2.978661060333252, 'learning_rate': 4.9403558831688665e-06, 'epoch': 2.26}

77%|███████▋ | 19500/25233 [3:07:36<51:31, 1.85it/s]

{'loss': 0.5271, 'grad_norm': 2.6893765926361084, 'learning_rate': 4.544049459041732e-06, 'epoch': 2.32}

79%|███████▉ | 20000/25233 [3:12:21<50:29, 1.73it/s]

{'loss': 0.512, 'grad_norm': 2.426579236984253, 'learning_rate': 4.147743034914597e-06, 'epoch': 2.38}

81%|████████ | 20500/25233 [3:16:56<43:54, 1.80it/s]

{'loss': 0.5094, 'grad_norm': 2.783143997192383, 'learning_rate': 3.751436610787461e-06, 'epoch': 2.44}

83%|████████▎ | 21000/25233 [3:21:28<37:31, 1.88it/s]

{'loss': 0.5087, 'grad_norm': 2.052034616470337, 'learning_rate': 3.355130186660326e-06, 'epoch': 2.5}

85%|████████▌ | 21500/25233 [3:26:00<34:20, 1.81it/s]

{'loss': 0.5118, 'grad_norm': 2.041140556335449, 'learning_rate': 2.958823762533191e-06, 'epoch': 2.56}

87%|████████▋ | 22000/25233 [3:30:36<28:58, 1.86it/s]

{'loss': 0.5138, 'grad_norm': 3.8002045154571533, 'learning_rate': 2.5625173384060558e-06, 'epoch': 2.62}

89%|████████▉ | 22500/25233 [3:35:14<24:55, 1.83it/s]

{'loss': 0.5165, 'grad_norm': 2.558199167251587, 'learning_rate': 2.1662109142789204e-06, 'epoch': 2.68}

91%|█████████ | 23000/25233 [3:39:47<19:58, 1.86it/s]

{'loss': 0.5179, 'grad_norm': 2.4674603939056396, 'learning_rate': 1.7699044901517855e-06, 'epoch': 2.73}

93%|█████████▎| 23500/25233 [3:44:16<15:33, 1.86it/s]

{'loss': 0.5131, 'grad_norm': 3.23014497756958, 'learning_rate': 1.3735980660246504e-06, 'epoch': 2.79}

95%|█████████▌| 24000/25233 [3:48:50<11:10, 1.84it/s]

{'loss': 0.4952, 'grad_norm': 1.7720539569854736, 'learning_rate': 9.772916418975153e-07, 'epoch': 2.85}

97%|█████████▋| 24500/25233 [3:53:21<06:25, 1.90it/s]

{'loss': 0.5124, 'grad_norm': 2.939202070236206, 'learning_rate': 5.8098521777038e-07, 'epoch': 2.91}

99%|█████████▉| 25000/25233 [3:57:55<02:05, 1.86it/s]

{'loss': 0.5089, 'grad_norm': 2.8494760990142822, 'learning_rate': 1.84678793643245e-07, 'epoch': 2.97}

100%|██████████| 25233/25233 [4:04:46<00:00, 1.72it/s]

{'eval_loss': 0.4647159278392792, 'eval_runtime': 277.1876, 'eval_samples_per_second': 60.349, 'eval_steps_per_second': 7.544, 'epoch': 3.0}

{'train_runtime': 14686.5169, 'train_samples_per_second': 13.744, 'train_steps_per_second': 1.718, 'train_loss': 0.5803486357610933, 'epoch': 3.0}

TrainOutput(global_step=25233, training_loss=0.5803486357610933, metrics={'train_runtime': 14686.5169, 'train_samples_per_second': 13.744, 'train_steps_per_second': 1.718, 'total_flos': 6592908962562048.0, 'train_loss': 0.5803486357610933, 'epoch': 3.0})

prompt = "Somatic hypermutation allows the immune system to"

from transformers import pipeline

generator = pipeline("text-generation", model="my_awesome_eli5_clm-model")

generator(prompt)

Hardware accelerator e.g. GPU is available in the environment, but no `device` argument is passed to the `Pipeline` object. Model will be on CPU.

[{'generated_text': 'Somatic hypermutation allows the immune system to t i m e | '}]

from transformers import AutoModelForCausalLM, TrainingArguments, Trainer

from peft import LoraConfig, get_peft_model

# "mps" is the Apple-silicon GPU backend; use "cuda" or "cpu" on other machines.
model = AutoModelForCausalLM.from_pretrained("distilbert/distilgpt2").to("mps")

print(model)

GPT2LMHeadModel(
  (transformer): GPT2Model(
    (wte): Embedding(50257, 768)
    (wpe): Embedding(1024, 768)
    (drop): Dropout(p=0.1, inplace=False)
    (h): ModuleList(
      (0-5): 6 x GPT2Block(
        (ln_1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
        (attn): GPT2SdpaAttention(
          (c_attn): Conv1D(nf=2304, nx=768)
          (c_proj): Conv1D(nf=768, nx=768)
          (attn_dropout): Dropout(p=0.1, inplace=False)
          (resid_dropout): Dropout(p=0.1, inplace=False)
        )
        (ln_2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
        (mlp): GPT2MLP(
          (c_fc): Conv1D(nf=3072, nx=768)
          (c_proj): Conv1D(nf=768, nx=3072)
          (act): NewGELUActivation()
          (dropout): Dropout(p=0.1, inplace=False)
        )
      )
    )
    (ln_f): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
  )
  (lm_head): Linear(in_features=768, out_features=50257, bias=False)
)
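The module tree above is enough to reproduce by hand the two trainable-parameter counts printed further down (81,912,576 for the full model and 1,179,648 for LoRA). The plain-Python sketch below assumes that LoRA with r=64 is applied only to the fused `c_attn` projection, which is PEFT's default target for GPT-2 and the only choice consistent with the printed count. GPT-2's `Conv1D` stores its weight transposed relative to `Linear`, but the parameter count is the same.

```python
vocab_size, n_positions, d_model, d_ff, n_layer = 50257, 1024, 768, 3072, 6  # from the printed tree


def dense_params(n_in, n_out):
    """Weight plus bias of a Linear/Conv1D layer mapping n_in -> n_out features."""
    return n_in * n_out + n_out


layer_norm = 2 * d_model  # scale and shift vectors
block = (
    layer_norm                            # ln_1
    + dense_params(d_model, 3 * d_model)  # attn.c_attn (nf=2304): fused q, k, v
    + dense_params(d_model, d_model)      # attn.c_proj
    + layer_norm                          # ln_2
    + dense_params(d_model, d_ff)         # mlp.c_fc
    + dense_params(d_ff, d_model)         # mlp.c_proj
)
# lm_head has no bias and shares its weight with wte (tied embeddings), so it adds nothing.
total = vocab_size * d_model + n_positions * d_model + n_layer * block + layer_norm  # + ln_f
print(total)  # 81912576

# LoRA on c_attn only: A is (r x 768) and B is (2304 x r) in each of the 6 blocks.
r = 64
lora = n_layer * r * (d_model + 3 * d_model)
print(lora, f"{100 * lora / total:.2f}%")  # 1179648 1.44%
```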

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("distilbert/distilgpt2")

def preprocess_function(examples):
    # Each example in the "text" dataset is a plain string (one line of the file), so tokenize it
    # directly. The LoRA run logged below used `[" ".join(x) for x in examples["text"]]`, which
    # spaces out the characters of every line ("time" -> "t i m e").
    return tokenizer(examples["text"])

tokenized_own_dataset = own_dataset.map(
    preprocess_function,
    batched=True,
    num_proc=4,
    remove_columns=own_dataset["train"].column_names,
)

block_size = 128

def group_texts(examples):
    # Concatenate all texts.
    concatenated_examples = {k: sum(examples[k], []) for k in examples.keys()}
    total_length = len(concatenated_examples[list(examples.keys())[0]])
    # Drop the remainder that does not fill a whole block.
    if total_length >= block_size:
        total_length = (total_length // block_size) * block_size
    # Split by chunks of block_size.
    result = {
        k: [t[i : i + block_size] for i in range(0, total_length, block_size)]
        for k, t in concatenated_examples.items()
    }
    result["labels"] = result["input_ids"].copy()
    return result

lm_dataset = tokenized_own_dataset.map(group_texts, batched=True, num_proc=4)

from transformers import DataCollatorForLanguageModeling

tokenizer.pad_token = tokenizer.eos_token
data_collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)

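batch_size = 32

training_args = TrainingArguments(
    output_dir="my_awesome_eli5_clm-model",
    eval_strategy="epoch",
    learning_rate=1e-5,
    weight_decay=0.0001,
    push_to_hub=False,
    per_device_train_batch_size=batch_size,
)

peft_config = LoraConfig(
    r=64,              # rank of the low-rank update matrices
    lora_alpha=128,    # the update is scaled by lora_alpha / r = 2
    lora_dropout=0.1,
    bias="none",
    # With task_type set, get_peft_model returns a PeftModelForCausalLM whose forward() exposes
    # `labels`, so the Trainer can also report eval_loss. The run logged below left this
    # commented out, which is most likely why its eval entries contain no eval_loss.
    task_type="CAUSAL_LM",
    # GPT-2 fuses the query/key/value projections into a single Conv1D named c_attn;
    # q_proj/k_proj/v_proj/o_proj are Llama/Qwen-style names that do not exist in GPT-2.
    target_modules=["c_attn"],
)

print(f"Trainable parameters for the base model: {model.num_parameters(only_trainable=True)}")
model = get_peft_model(model, peft_config)
print(f"Trainable parameters for the LoRA model: {model.num_parameters(only_trainable=True)}")

trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=lm_dataset["train"],
    eval_dataset=lm_dataset["test"],
    data_collator=data_collator,
    processing_class=tokenizer,
)

trainer.train()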

Trainable parameters for the base model: 81912576
Trainable parameters for the LoRA model: 1179648

0%| | 38/50463 [00:43<16:01:46, 1.14s/it] 8%|▊ | 500/6309 [13:36<2:34:54, 1.60s/it] 8%|▊ | 500/6309 [13:36<2:34:54, 1.60s/it]

{'loss': 1.4383, 'grad_norm': 0.24702320992946625, 'learning_rate': 9.207481375812333e-06, 'epoch': 0.24}

16%|█▌ | 1000/6309 [26:56<2:20:18, 1.59s/it] 16%|█▌ | 1000/6309 [26:56<2:20:18, 1.59s/it]

{'loss': 1.2987, 'grad_norm': 0.4560306668281555, 'learning_rate': 8.414962751624663e-06, 'epoch': 0.48}

24%|██▍ | 1500/6309 [40:04<2:09:28, 1.62s/it] 24%|██▍ | 1500/6309 [40:04<2:09:28, 1.62s/it]

{'loss': 1.2303, 'grad_norm': 0.4419333040714264, 'learning_rate': 7.622444127436995e-06, 'epoch': 0.71}

32%|███▏ | 2000/6309 [53:29<1:52:40, 1.57s/it] 32%|███▏ | 2000/6309 [53:29<1:52:40, 1.57s/it]

{'loss': 1.1944, 'grad_norm': 0.6028203964233398, 'learning_rate': 6.829925503249327e-06, 'epoch': 0.95}

33%|███▎ | 2103/6309 [56:14<1:49:34, 1.56s/it]

33%|███▎ | 2103/6309 [1:01:00<1:49:34, 1.56s/it]

{'eval_runtime': 286.6126, 'eval_samples_per_second': 58.364, 'eval_steps_per_second': 7.296, 'epoch': 1.0}

40%|███▉ | 2500/6309 [1:11:21<1:33:49, 1.48s/it] 40%|███▉ | 2500/6309 [1:11:21<1:33:49, 1.48s/it]

{'loss': 1.1555, 'grad_norm': 0.535328209400177, 'learning_rate': 6.037406879061658e-06, 'epoch': 1.19}

48%|████▊ | 3000/6309 [1:23:45<1:21:37, 1.48s/it] 48%|████▊ | 3000/6309 [1:23:45<1:21:37, 1.48s/it]

{'loss': 1.1373, 'grad_norm': 0.6474247574806213, 'learning_rate': 5.24488825487399e-06, 'epoch': 1.43}

55%|█████▌ | 3500/6309 [1:36:40<1:14:35, 1.59s/it] 55%|█████▌ | 3500/6309 [1:36:40<1:14:35, 1.59s/it]

{'loss': 1.1318, 'grad_norm': 0.599763035774231, 'learning_rate': 4.452369630686321e-06, 'epoch': 1.66}

63%|██████▎ | 4000/6309 [1:50:01<1:00:56, 1.58s/it] 63%|██████▎ | 4000/6309 [1:50:01<1:00:56, 1.58s/it]

{'loss': 1.1228, 'grad_norm': 0.6193230748176575, 'learning_rate': 3.659851006498653e-06, 'epoch': 1.9}

67%|██████▋ | 4206/6309 [1:55:24<3:02:27, 5.21s/it]

67%|██████▋ | 4206/6309 [2:00:12<3:02:27, 5.21s/it]

{'eval_runtime': 287.9994, 'eval_samples_per_second': 58.083, 'eval_steps_per_second': 7.26, 'epoch': 2.0}

71%|███████▏ | 4500/6309 [2:07:42<44:25, 1.47s/it] 71%|███████▏ | 4500/6309 [2:07:43<44:25, 1.47s/it]

{'loss': 1.109, 'grad_norm': 0.7084806561470032, 'learning_rate': 2.8673323823109843e-06, 'epoch': 2.14}

79%|███████▉ | 5000/6309 [2:20:07<32:18, 1.48s/it] 79%|███████▉ | 5000/6309 [2:20:07<32:18, 1.48s/it]

{'loss': 1.1031, 'grad_norm': 0.5852240324020386, 'learning_rate': 2.074813758123316e-06, 'epoch': 2.38}

87%|████████▋ | 5500/6309 [2:32:31<20:01, 1.48s/it] 87%|████████▋ | 5500/6309 [2:32:31<20:01, 1.48s/it]

{'loss': 1.097, 'grad_norm': 0.5716857314109802, 'learning_rate': 1.2822951339356476e-06, 'epoch': 2.62}

95%|█████████▌| 6000/6309 [2:44:55<07:38, 1.48s/it]

{'loss': 1.0928, 'grad_norm': 0.6788768172264099, 'learning_rate': 4.897765097479791e-07, 'epoch': 2.85}

100%|██████████| 6309/6309 [2:52:49<00:00, 5.29s/it]

100%|██████████| 6309/6309 [2:57:37<00:00, 5.29s/it]

100%|██████████| 6309/6309 [2:57:37<00:00, 1.69s/it]

{'eval_runtime': 286.995, 'eval_samples_per_second': 58.287, 'eval_steps_per_second': 7.286, 'epoch': 3.0}

{'train_runtime': 10657.7698, 'train_samples_per_second': 18.939, 'train_steps_per_second': 0.592, 'train_loss': 1.17203244438903, 'epoch': 3.0}

TrainOutput(global_step=6309, training_loss=1.17203244438903, metrics={'train_runtime': 10657.7698, 'train_samples_per_second': 18.939, 'train_steps_per_second': 0.592, 'total_flos': 6775780751179776.0, 'train_loss': 1.17203244438903, 'epoch': 3.0})
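The logged `learning_rate` values fall in a straight line towards zero, which is consistent with the `Trainer` default of a linear decay schedule with no warmup. The quick check below assumes that schedule and a base rate of 1e-5; the DistilGPT2 training arguments are not repeated in this section, but 1e-5 is the value that reproduces the logged numbers.

```python
import math

def linear_lr(step: int, total_steps: int, base_lr: float = 1e-5) -> float:
    """Learning rate after `step` updates under linear decay to 0 with no warmup (assumed)."""
    return base_lr * max(0.0, (total_steps - step) / total_steps)

# (global step, logged learning_rate) pairs copied from the DistilGPT2 log above.
logged = [
    (2000, 6.829925503249327e-06),
    (4000, 3.659851006498653e-06),
    (6000, 4.897765097479791e-07),
]
all_match = all(math.isclose(linear_lr(s, 6309), lr, rel_tol=1e-6) for s, lr in logged)
print(all_match)  # True
```

The same formula with 9615 total steps also fits the Qwen run further down, e.g. step 500 gives about 9.48e-06.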

Infer¶

prompt = "Somatic hypermutation allows the immune system to"

from transformers import pipeline

generator = pipeline("text-generation", model="my_awesome_eli5_clm-model")

generator(prompt)

Hardware accelerator e.g. GPU is available in the environment, but no `device` argument is passed to the `Pipeline` object. Model will be on CPU.

[{'generated_text': 'Somatic hypermutation allows the immune system to r a n g n a m e t o s u p p o r t / h e a d l e r s i n S c'}]
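The generated continuation is a run of single characters separated by spaces. One plausible cause, assuming each `text` entry in `own_dataset` is a plain string rather than a list of strings: `" ".join(x)` in `preprocess_function` iterates over a string character by character, so the model is trained on spaced-out letters and learns to reproduce them. The snippet below only demonstrates the Python behaviour; the sample strings are made up.

```python
def join_like_preprocess(entry):
    # Same expression as in preprocess_function: " ".join(x)
    return " ".join(entry)

as_list = join_like_preprocess(["Somatic hypermutation", "allows the immune system"])
as_string = join_like_preprocess("immune")

print(as_list)    # Somatic hypermutation allows the immune system
print(as_string)  # i m m u n e
```

If that is what happened here, passing `examples["text"]` to the tokenizer directly (joining only when an entry really is a list) keeps the original words intact.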

from transformers import AutoModelForCausalLM, TrainingArguments, Trainer

from peft import LoraConfig, get_peft_model

model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen2.5-0.5B").to("mps")

print(model)

Qwen2ForCausalLM(
  (model): Qwen2Model(
    (embed_tokens): Embedding(151936, 896)
    (layers): ModuleList(
      (0-23): 24 x Qwen2DecoderLayer(
        (self_attn): Qwen2SdpaAttention(
          (q_proj): Linear(in_features=896, out_features=896, bias=True)
          (k_proj): Linear(in_features=896, out_features=128, bias=True)
          (v_proj): Linear(in_features=896, out_features=128, bias=True)
          (o_proj): Linear(in_features=896, out_features=896, bias=False)
          (rotary_emb): Qwen2RotaryEmbedding()
        )
        (mlp): Qwen2MLP(
          (gate_proj): Linear(in_features=896, out_features=4864, bias=False)
          (up_proj): Linear(in_features=896, out_features=4864, bias=False)
          (down_proj): Linear(in_features=4864, out_features=896, bias=False)
          (act_fn): SiLU()
        )
        (input_layernorm): Qwen2RMSNorm((896,), eps=1e-06)
        (post_attention_layernorm): Qwen2RMSNorm((896,), eps=1e-06)
      )
    )
    (norm): Qwen2RMSNorm((896,), eps=1e-06)
    (rotary_emb): Qwen2RotaryEmbedding()
  )
  (lm_head): Linear(in_features=896, out_features=151936, bias=False)
)
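The layer shapes in this printout are enough to recompute the model size by hand. The sketch below adds up the weights and biases shown above and assumes that `lm_head` shares its weights with `embed_tokens` (tied embeddings), so it is counted only once. Under that assumption the total equals the base-model count printed during training setup in this post.

```python
# Layer sizes read off the print(model) output above.
vocab, hidden, kv_dim, ffn, n_layers = 151936, 896, 128, 4864, 24

embed = vocab * hidden                   # embed_tokens
attn = hidden * hidden + hidden          # q_proj (with bias)
attn += 2 * (hidden * kv_dim + kv_dim)   # k_proj and v_proj (with bias)
attn += hidden * hidden                  # o_proj (no bias)
mlp = 3 * hidden * ffn                   # gate_proj, up_proj, down_proj (no bias)
norms = 2 * hidden                       # input and post-attention RMSNorm weights
per_layer = attn + mlp + norms

# Assumption: lm_head is tied to embed_tokens, so it adds no extra parameters.
total = embed + n_layers * per_layer + hidden  # + final norm
print(f"{total:,}")  # 494,032,768
```

Counting an untied `lm_head` would add another 136,134,656 parameters, which would not match the logged figure. Note also how small k_proj and v_proj are (896 → 128): the attention uses fewer key/value heads than query heads.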

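from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen2.5-0.5B")

def preprocess_function(examples):
    # Join only when an entry is a list of strings; " ".join() on a plain string
    # would insert a space between every character.
    texts = [" ".join(x) if isinstance(x, list) else x for x in examples["text"]]
    return tokenizer(texts)

tokenized_own_dataset = own_dataset.map(
    preprocess_function,
    batched=True,
    num_proc=4,
    remove_columns=own_dataset["train"].column_names,
)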

block_size = 128

def group_texts(examples):
    # Concatenate all texts.
    concatenated_examples = {k: sum(examples[k], []) for k in examples.keys()}
    total_length = len(concatenated_examples[list(examples.keys())[0]])
    if total_length >= block_size:
        total_length = (total_length // block_size) * block_size
    # Split by chunks of block_size.
    result = {
        k: [t[i : i + block_size] for i in range(0, total_length, block_size)]
        for k, t in concatenated_examples.items()
    }
    result["labels"] = result["input_ids"].copy()
    return result

lm_dataset = tokenized_own_dataset.map(group_texts, batched=True, num_proc=4)
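To see what `group_texts` does, here is the same logic run on a tiny hand-made batch with `block_size = 4` instead of 128; the token ids are made up. All sequences in the batch are concatenated, cut into equal blocks, and the leftover tokens that do not fill a whole block are dropped.

```python
block_size = 4  # tiny block size for illustration only (the post uses 128)

def group_texts(examples):
    # Concatenate every column (input_ids, attention_mask, ...) across the batch.
    concatenated = {k: sum(examples[k], []) for k in examples.keys()}
    total_length = len(concatenated[list(examples.keys())[0]])
    # Drop the tail that does not fill a whole block.
    if total_length >= block_size:
        total_length = (total_length // block_size) * block_size
    result = {
        k: [t[i : i + block_size] for i in range(0, total_length, block_size)]
        for k, t in concatenated.items()
    }
    result["labels"] = result["input_ids"].copy()
    return result

batch = {
    "input_ids": [[1, 2, 3], [4, 5, 6, 7, 8, 9, 10]],
    "attention_mask": [[1, 1, 1], [1, 1, 1, 1, 1, 1, 1]],
}
out = group_texts(batch)
print(out["input_ids"])  # [[1, 2, 3, 4], [5, 6, 7, 8]]  (tokens 9 and 10 are dropped)
```

Because blocks can span the boundary between two documents, a training example may start mid-sentence; that is the usual trade-off of this packing approach in exchange for no padding.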

from transformers import DataCollatorForLanguageModeling

tokenizer.pad_token = tokenizer.eos_token

data_collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)

Map (num_proc=4): 100%|██████████| 110255/110255 [00:02<00:00, 49880.67 examples/s]
Map (num_proc=4): 100%|██████████| 27564/27564 [00:00<00:00, 42227.31 examples/s]
Map (num_proc=4): 100%|██████████| 110255/110255 [00:02<00:00, 43033.31 examples/s]
Map (num_proc=4): 100%|██████████| 27564/27564 [00:00<00:00, 41738.77 examples/s]
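With `mlm=False`, `DataCollatorForLanguageModeling` builds `labels` by copying `input_ids` and replacing every position equal to the pad token id with -100, which the loss ignores; the model then shifts the labels internally so each position predicts the next token. The pure-Python sketch below mimics that rule (it is not the library's code, and the token ids are hypothetical). It also shows a side effect of `tokenizer.pad_token = tokenizer.eos_token`: genuine end-of-sequence tokens are masked as well and are never learned as targets.

```python
IGNORE_INDEX = -100  # label value skipped by PyTorch's cross-entropy loss by default

def clm_labels(input_ids, pad_token_id):
    """Copy input_ids to labels, masking every position equal to the pad id."""
    return [[IGNORE_INDEX if tok == pad_token_id else tok for tok in row] for row in input_ids]

def next_token_targets(input_row, label_row):
    """(context, target) pairs a causal LM is trained on after the internal shift."""
    return [(input_row[:i], label_row[i]) for i in range(1, len(input_row))
            if label_row[i] != IGNORE_INDEX]

EOS = 99  # hypothetical eos id, also used as pad (pad_token = eos_token)
batch = [[11, 12, 13, EOS], [21, 22, EOS, EOS]]
labels = clm_labels(batch, pad_token_id=EOS)
print(labels)                                   # [[11, 12, 13, -100], [21, 22, -100, -100]]
print(next_token_targets(batch[0], labels[0]))  # [([11], 12), ([11, 12], 13)]
```

Since `group_texts` already produces blocks of equal length, padding should rarely be needed in this setup, so the effect is likely minor here.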

batch_size = 8

training_args = TrainingArguments(

output_dir="my_awesome_eli5_clm-model",

eval_strategy="epoch",

learning_rate=1e-5,

weight_decay=0.0001,

push_to_hub=False,

per_device_train_batch_size=batch_size,

)

peft_config = LoraConfig(

r=64,

lora_alpha=128,

lora_dropout=0.1,

# bias="none",

# task_type="CAUSAL_LM",

# target_modules=["q_proj", "k_proj", "v_proj", "o_proj"],

)
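With `target_modules` commented out, PEFT falls back to its own default target modules for the architecture. A LoRA adapter on a linear layer with `in_features` inputs and `out_features` outputs adds an A matrix of r × in_features and a B matrix of out_features × r. The arithmetic below reproduces the LoRA trainable-parameter count printed by this cell (4,325,376) if adapters sit on `q_proj` and `v_proj` only in all 24 layers, which suggests those are the defaults applied here.

```python
def lora_params(in_features: int, out_features: int, r: int) -> int:
    # A: r x in_features, B: out_features x r (LoraConfig uses bias="none" by default)
    return r * in_features + out_features * r

r, n_layers = 64, 24
# Shapes from print(model): q_proj 896 -> 896, v_proj 896 -> 128
per_layer = lora_params(896, 896, r) + lora_params(896, 128, r)
print(n_layers * per_layer)  # 4325376

# For comparison: also adapting k_proj and o_proj, as in the commented-out list
all_attn = per_layer + lora_params(896, 128, r) + lora_params(896, 896, r)
print(n_layers * all_attn)  # 8650752
```

At roughly 0.9% of the 494M base parameters, this is why LoRA fits comfortably on a laptop; `lora_alpha=128` with `r=64` scales the adapter output by alpha / r = 2.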

print(f"Trainable parameters for the base model: {model.num_parameters(only_trainable=True)}")

model = get_peft_model(model, peft_config)

print(f"Trainable parameters for the LoRA model: {model.num_parameters(only_trainable=True)}")

trainer = Trainer(

model=model,

args=training_args,

train_dataset=lm_dataset["train"],

eval_dataset=lm_dataset["test"],

data_collator=data_collator,

# tokenizer=tokenizer,

)

trainer.train()

Trainable parameters for the base model: 494032768
'NoneType' object has no attribute 'cadam32bit_grad_fp32'
Trainable parameters for the LoRA model: 4325376

/Users/LSoica/work/AI/blog/.venv/lib/python3.12/site-packages/bitsandbytes/cextension.py:34: UserWarning: The installed version of bitsandbytes was compiled without GPU support. 8-bit optimizers, 8-bit multiplication, and GPU quantization are unavailable.

warn("The installed version of bitsandbytes was compiled without GPU support. "

5%|▌ | 500/9615 [39:28<4:37:32, 1.83s/it]

{'loss': 2.0717, 'grad_norm': 3.0585858821868896, 'learning_rate': 9.479979199167968e-06, 'epoch': 0.16}

10%|█ | 1000/9615 [3:29:29<24:59:46, 10.45s/it]

{'loss': 1.766, 'grad_norm': 3.2948787212371826, 'learning_rate': 8.959958398335933e-06, 'epoch': 0.31}

16%|█▌ | 1500/9615 [5:47:00<116:44:43, 51.79s/it]

/Users/LSoica/work/AI/blog/.venv/lib/python3.12/site-packages/peft/utils/other.py:689: UserWarning: Unable to fetch remote file due to the following error (ProtocolError('Connection aborted.', RemoteDisconnected('Remote end closed connection without response')), '(Request ID: ee555c7e-30f5-4709-9944-a739ffe482a3)') - silently ignoring the lookup for the file config.json in Qwen/Qwen2.5-0.5B.

warnings.warn(

/Users/LSoica/work/AI/blog/.venv/lib/python3.12/site-packages/peft/utils/save_and_load.py:243: UserWarning: Could not find a config file in Qwen/Qwen2.5-0.5B - will assume that the vocabulary was not modified.

warnings.warn(

{'loss': 1.6554, 'grad_norm': 3.529787063598633, 'learning_rate': 8.439937597503902e-06, 'epoch': 0.47}

21%|██ | 2000/9615 [8:24:12<81:16:02, 38.42s/it]

{'loss': 1.6076, 'grad_norm': 3.6108458042144775, 'learning_rate': 7.919916796671867e-06, 'epoch': 0.62}

26%|██▌ | 2500/9615 [9:45:17<3:20:55, 1.69s/it]

/Users/LSoica/work/AI/blog/.venv/lib/python3.12/site-packages/peft/utils/other.py:689: UserWarning: Unable to fetch remote file due to the following error (ProtocolError('Connection aborted.', RemoteDisconnected('Remote end closed connection without response')), '(Request ID: 67465ae8-bf4a-4ea0-9da0-bd78880fa4fd)') - silently ignoring the lookup for the file config.json in Qwen/Qwen2.5-0.5B.

warnings.warn(

/Users/LSoica/work/AI/blog/.venv/lib/python3.12/site-packages/peft/utils/save_and_load.py:243: UserWarning: Could not find a config file in Qwen/Qwen2.5-0.5B - will assume that the vocabulary was not modified.

warnings.warn(

{'loss': 1.5565, 'grad_norm': 3.8887996673583984, 'learning_rate': 7.399895995839834e-06, 'epoch': 0.78}

31%|███ | 3000/9615 [9:59:19<2:57:29, 1.61s/it]

{'loss': 1.5223, 'grad_norm': 3.7076895236968994, 'learning_rate': 6.879875195007801e-06, 'epoch': 0.94}

33%|███▎ | 3205/9615 [10:12:18<3:02:07, 1.70s/it]

{'eval_runtime': 448.7852, 'eval_samples_per_second': 14.198, 'eval_steps_per_second': 1.776, 'epoch': 1.0}

36%|███▋ | 3500/9615 [10:20:19<2:43:35, 1.61s/it]

{'loss': 1.4889, 'grad_norm': 4.151812553405762, 'learning_rate': 6.359854394175767e-06, 'epoch': 1.09}

42%|████▏ | 4000/9615 [10:33:46<2:30:17, 1.61s/it]

{'loss': 1.4773, 'grad_norm': 4.69639778137207, 'learning_rate': 5.839833593343734e-06, 'epoch': 1.25}

47%|████▋ | 4500/9615 [10:47:12<2:18:18, 1.62s/it]

{'loss': 1.4474, 'grad_norm': 4.382413864135742, 'learning_rate': 5.3198127925117e-06, 'epoch': 1.4}

52%|█████▏ | 5000/9615 [11:00:39<2:04:00, 1.61s/it]

{'loss': 1.4512, 'grad_norm': 5.491856575012207, 'learning_rate': 4.799791991679667e-06, 'epoch': 1.56}

57%|█████▋ | 5500/9615 [11:14:06<1:50:40, 1.61s/it]

{'loss': 1.4386, 'grad_norm': 4.476752281188965, 'learning_rate': 4.279771190847634e-06, 'epoch': 1.72}

62%|██████▏ | 6000/9615 [11:27:33<1:36:57, 1.61s/it]

{'loss': 1.4228, 'grad_norm': 3.8093013763427734, 'learning_rate': 3.759750390015601e-06, 'epoch': 1.87}

67%|██████▋ | 6410/9615 [11:47:02<2:02:51, 2.30s/it]

{'eval_runtime': 469.795, 'eval_samples_per_second': 13.563, 'eval_steps_per_second': 1.696, 'epoch': 2.0}

68%|██████▊ | 6500/9615 [11:49:54<1:28:43, 1.71s/it]

{'loss': 1.4119, 'grad_norm': 3.992978572845459, 'learning_rate': 3.239729589183568e-06, 'epoch': 2.03}

73%|███████▎ | 7000/9615 [12:03:33<1:10:30, 1.62s/it]

{'loss': 1.4053, 'grad_norm': 4.863268852233887, 'learning_rate': 2.7197087883515344e-06, 'epoch': 2.18}

78%|███████▊ | 7500/9615 [12:17:21<59:39, 1.69s/it]

{'loss': 1.3926, 'grad_norm': 4.6374831199646, 'learning_rate': 2.199687987519501e-06, 'epoch': 2.34}

83%|████████▎ | 8000/9615 [12:31:39<45:43, 1.70s/it]

{'loss': 1.3866, 'grad_norm': 5.041004657745361, 'learning_rate': 1.6796671866874676e-06, 'epoch': 2.5}

88%|████████▊ | 8500/9615 [12:45:34<29:53, 1.61s/it]

{'loss': 1.3873, 'grad_norm': 4.435614109039307, 'learning_rate': 1.1596463858554343e-06, 'epoch': 2.65}

94%|█████████▎| 9000/9615 [12:59:02<16:36, 1.62s/it]

{'loss': 1.3907, 'grad_norm': 4.779606342315674, 'learning_rate': 6.396255850234009e-07, 'epoch': 2.81}

99%|█████████▉| 9500/9615 [13:12:31<03:05, 1.61s/it]

{'loss': 1.3867, 'grad_norm': 4.215789318084717, 'learning_rate': 1.1960478419136767e-07, 'epoch': 2.96}

100%|██████████| 9615/9615 [13:23:21<00:00, 4.13s/it]

{'eval_runtime': 452.6747, 'eval_samples_per_second': 14.076, 'eval_steps_per_second': 1.761, 'epoch': 3.0}

100%|██████████| 9615/9615 [13:23:28<00:00, 5.01s/it]

{'train_runtime': 48208.209, 'train_samples_per_second': 1.595, 'train_steps_per_second': 0.199, 'train_loss': 1.507183917239502, 'epoch': 3.0}

TrainOutput(global_step=9615, training_loss=1.507183917239502, metrics={'train_runtime': 48208.209, 'train_samples_per_second': 1.595, 'train_steps_per_second': 0.199, 'total_flos': 2.1396524049432576e+16, 'train_loss': 1.507183917239502, 'epoch': 3.0})
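The loss is the mean token-level cross-entropy in nats, so `exp(loss)` gives a perplexity that is easier to interpret. The sketch uses the `train_loss` values from the two `TrainOutput` summaries in this post. Two caveats: `train_loss` is averaged over the whole run, including the high-loss early steps, and the eval dictionaries above do not show an `eval_loss`, which would be the better number to report; the two models also use different tokenizers, so their per-token perplexities are not directly comparable.

```python
import math

# Mean training loss (cross-entropy, nats per token) from each run's TrainOutput.
train_loss = {
    "DistilGPT2": 1.17203244438903,
    "Qwen2.5-0.5B + LoRA": 1.507183917239502,
}
# Perplexity = exp(mean cross-entropy)
perplexity = {name: math.exp(loss) for name, loss in train_loss.items()}
for name, ppl in perplexity.items():
    print(f"{name}: perplexity ~ {ppl:.2f}")
```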

Infer¶

prompt = "What is a LLM?"

messages = [

{"role": "system", "content": "You are an AI assistant created by LS."},

{"role": "user", "content": prompt}

]

text = tokenizer.apply_chat_template(

messages,

tokenize=False,

add_generation_prompt=True

)

model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

generated_ids = model.generate(

**model_inputs,

max_new_tokens=512

)

generated_ids = [

output_ids[len(input_ids):] for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)

]

response = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)[0]

print(response)

Setting `pad_token_id` to `eos_token_id`:None for open-end generation.

A LLM is a program that can be used to generate human readable text based on a sequence of input tokens. It is a type of natural language processing (nlp) algorithm that can be used to generate human readable text based on a sequence of input tokens. LLMs are commonly used for a variety of tasks, such as text generation, question answering, and information retrieval. They are often used in natural language processing applications where a human user needs to be able to understand and interpret the generated text. LLMs can be trained on large amounts of text data to improve their performance and accuracy.
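For a decoder-only model, `generate` returns each prompt followed by the newly generated tokens, which is why the inference code slices off the first `len(input_ids)` tokens of every row before decoding. A toy version with made-up token ids:

```python
# Hypothetical token ids for a batch of two prompts of equal length.
prompt_ids = [[5, 6, 7], [8, 9, 10]]
# generate() output: each prompt followed by its continuation.
generated = [[5, 6, 7, 40, 41, 42], [8, 9, 10, 50, 51, 2]]

# Same slicing as in the inference code: keep only what comes after the prompt.
new_tokens = [out[len(inp):] for inp, out in zip(prompt_ids, generated)]
print(new_tokens)  # [[40, 41, 42], [50, 51, 2]]
```

Without this step, `batch_decode` would echo the system prompt and the question back in front of the answer.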